Doctors facing an onslaught of AI-generated patient care denials from insurance companies are fighting back — and they’re using the same technology to automate their appeals.

Prior authorization, where doctors must get permission from insurance companies before providing a medical service, has become “a nightmare,” according to experts. Now, it’s becoming an AI arms race.

“And, who loses? Yup, patients,” said Dr. Ashish Kumar Jha, dean of the School of Public Health at Brown University. More often than not, clinicians have historically simply given up once their appeals are denied.

Jha, who is also a professor of Health Services, Policy and Practices at Brown and served as the White House COVID-19 response coordinator in 2022 and 2023, said that while prior authorization has been a major issue for decades, only recently has AI been used to “turbocharge it” and create batch denials. The denials force physicians to spend hours each week challenging them on behalf of their patients.

Generative AI (genAI) is based on large language models, which are trained on massive amounts of data. People then shape how the model answers queries by refining the instructions they give it, a technique known as prompt engineering.

“So, all of the [insurance company] practices over the last 10 to 15 years of denying more and more buckets of services — they’ve now put that into databases, trained up their AI systems and that has made their processes a lot faster and more efficient for insurance companies,” Jha said. “That has gotten a lot of attention over the last couple of years.”

While the use of AI tools by insurance companies is not new, the launch of OpenAI’s ChatGPT and other chatbots in the last few years allowed genAI to fuel a huge increase in automated denials, something industry analysts say they saw coming.

Four years ago, research firm Gartner predicted a “war will break out” among 25% of payers and providers resulting from competing automated claim and pre-authorization transactions. “We now have the appeals bot war,” Mandi Bishop, a Gartner CIO analyst and healthcare strategist, said in a recent interview.

A painful process for all

The prior authorization process is painful for all sides in the healthcare community, as it’s manually intensive, with letters moving back and forth between fax machines. So, when health insurance companies saw an opportunity to automate that process, it made sense from a productivity perspective.

When physicians saw the same need, suppliers of electronic health record technology jumped at the chance to equip their clients with the same genAI tools. Instead of taking 30 minutes to write up a pre-authorization treatment request, a genAI bot can spit it out in seconds.

Because the original pre-authorization requests, and any subsequent appeals, contain substantive evidence to support treatment based on a patient’s health record, the chatbots must be connected to the health record system to be able to generate the requests.

EPIC, one of the largest electronic health record companies in the United States, has rolled out genAI tools to handle prior-authorization requests to a small group of physicians who are now piloting them. Several major health systems are also currently trying out an AI platform from Doximity.

Dr. Amit Phull, chief physician experience officer for Doximity, which sells a platform with a HIPAA-compliant version of ChatGPT, said the company’s tech can drastically reduce the time clinicians spend on administrative work. Doximity claims to have two million users, 80% of whom are physicians. Last year, the company surveyed about 500 clinicians who were piloting the platform and found it could save them 12 to 13 hours a week in administrative work.

“In an eight-hour shift in my ER, I can see 25 to 35 patients, so if I was ruthlessly efficient and saved those 12 to 13 hours, we’re talking about a significant increase in the number of patients I can see,” Phull said.
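As a rough back-of-envelope check on that estimate, the short Python sketch below converts the quoted figures into patient visits. It assumes, purely for illustration, that every saved hour would be spent seeing patients at the same emergency-room pace Phull describes; the function name and that assumption are hypothetical and do not come from Doximity or its survey.

```python
def extra_patients(hours_saved, patients_per_shift, shift_hours=8.0):
    """Patients that could be seen in the saved hours at the same per-hour pace.

    Illustrative assumption: all saved administrative time becomes patient time.
    """
    patients_per_hour = patients_per_shift / shift_hours
    return hours_saved * patients_per_hour


# Low end: 12 hours saved at 25 patients per eight-hour shift.
low = extra_patients(12, 25)
# High end: 13 hours saved at 35 patients per eight-hour shift.
high = extra_patients(13, 35)

print(f"Estimated additional patients per week: {low:.1f} to {high:.1f}")
```

Under those assumptions the range works out to somewhere between the high 30s and the high 50s of additional patients a week. In practice the figure would likely be lower, since not all recovered time would go to direct patient care.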

Clinicians who regularly submit prior authorization requests complain the process is “purposefully opaque” and cumbersome, and it can sometimes force doctors to choose a different course of treatment for patients, according to Phull. At the very least, clinicians often get caught in a vicious cycle of pre-authorization submission, denial, and appeal — all of which require continuous paperwork tracking while keeping a patient up to date on what’s going on.

“What we tried to do is take this technology, train it specifically on medical documentation, and bring that network layer to it so that physicians can learn from the successes of other clinicians,” Phull said. “Then we have the ability to hard wire that into our other platform’s technologies like digital fax.”

Avoiding ‘mountains…of busywork’

For physicians, the need to reduce the work involved in appealing prior authorization denials “has never been greater,” according to Dr. Jesse M. Ehrenfeld, former president of the American Medical Association.

“Mountains of administrative busywork, hours of phone calls, and other clerical tasks tied to the onerous review process not only rob physicians of face time with patients, but studies show also contribute to physician dissatisfaction and burnout,” Ehrenfeld wrote in a January article for the AMA.

More than 80% of physicians surveyed by the AMA said patients abandon treatment due to authorization struggles with insurers. And more than one-third of physicians surveyed by the AMA said prior authorization fights have led to serious adverse outcomes for patients in their care, including avoidable hospitalizations, life-threatening events, permanent disabilities, and even death.

Ehrenfeld was writing in response to a new rule by the Centers for Medicare & Medicaid Services (CMS) due to take effect in 2026 and 2027 that will streamline the electronic approval process for prior authorization requests.

In 2023, nine states and the District of Columbia passed legislation that reformed the process in their jurisdictions. At the start of 2024, there were already more than 70 prior authorization reform bills of varying types among 28 states.

Earlier this month, Jha appeared before the National Conference of State Legislatures to discuss the use of genAI in prior authorization. Some legislators feel the solution is to ban the use of AI for prior authorization assessments. Jha, however, said he doesn’t see AI as the fundamental problem.

“I see AI as an enabler of making things worse, but it was bad even before AI,” Jha said. “I think [banning AI] — it’s very much treating the symptom and not the cause.”

Another solution legislators have floated would force insurance companies to disclose when they use AI to automate denials, but Jha doesn’t see the purpose behind that kind of move. “Everyone is going to be using it, so every denial will say it used AI,” he said. “So, I don’t know that disclosure will help.”

Another solution offered by lawmakers would get physicians involved in overseeing the AI algorithm insurance companies use. But Jha and others said they don’t know what that means — whether physicians would have to oversee the training of LLMs and monitor their outputs or whether it would be left to a technology expert.

“So, I think states are getting into the action and they recognize there’s a problem, but I don’t think [they] have figured out how to address it,” Jha said.

Jha said policymakers need to think more broadly than “AI good versus AI bad,” and instead see it as a technology that, like any other, has pluses and minuses. In other words, its use shouldn’t be overregulated before physicians, who are already wary of the technology, can fully grasp its potential benefits.

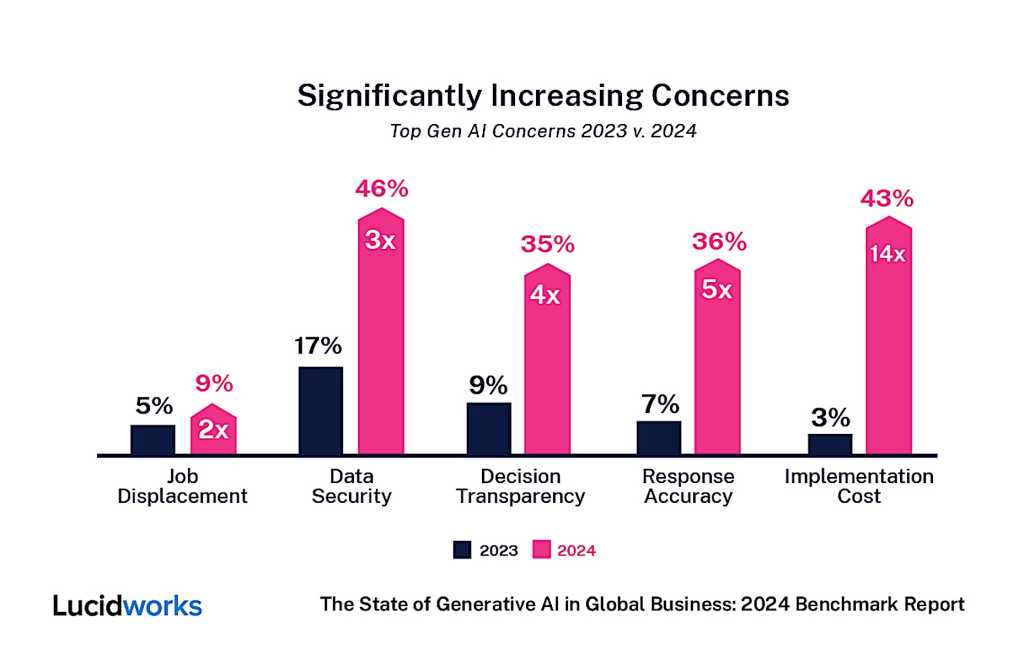

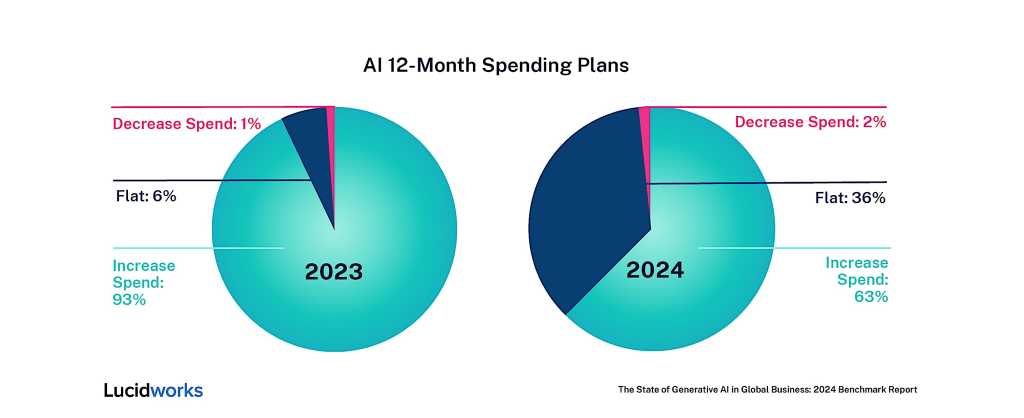

Most healthcare organizations are acting as slow followers in deploying AI because of potential risks, such as security and data privacy risks, hallucinations, and erroneous data. Physicians are only beginning to use it now, but those who do have become a very vocal minority in praising its benefits, such as creating clinical notes, handling intelligent document processing, and generating treatment options.

“I’d say it’s got to be less than 1% of physicians,” Jha said. “It’s just that if there are a million doctors out there and it’s 1% of them, then that’s 10,000 doctors using AI. And they’re out there publicly talking about how awesome it is. It feels like all the doctors are using AI, and they’re really not.”

Last year, UnitedHealthcare and Cigna Healthcare faced class-action lawsuits from members or their families alleging the organizations had used AI tools to “wrongfully deny members’ medical claims.”

In Cigna’s case, reports claimed it denied more than 300,000 claims over two months in 2022, which equated to 1.2 seconds of review per claim on average. UnitedHealthcare used an AI-based platform called nH Predict from NaviHealth. The lawsuit against it claimed the technology had a 90% error rate, overriding physicians who said the expenses were medically necessary. Humana was later also sued over its use of nH Predict.

The revelations that emerged from those lawsuits led to a lot of “soul searching” by the federal CMS and healthcare technology vendors, according to Gartner’s Bishop. Health insurance firms have taken a step back.

According to Bishop, since the batch denials of claims drew the attention of Congress, there has been a significant increase in efforts across healthcare to “auto-approve” treatment requests. Even so, Jha said batch denials are still common and the issue is likely to continue for the foreseeable future.

“These are early days,” Jha said. “I think [healthcare] providers are just now getting on board with AI. In my mind, this is just round one of the AI-vs-AI battle. I don’t think any of us think this is over. There will be escalation here.”

“The one person I didn’t talk about in all this is the patient; they’re the ones who get totally glossed over in this.”