Artificial intelligence (AI) has rapidly transformed the chip industry since going mainstream two years ago, driving demand for specialized processors, accelerating design innovation, and reshaping global supply chains and markets.

The generative AI (genAI) revolution that began with OpenAI’s release of ChatGPT in late 2022 continues to push the limits of AI inference, large language models (LLMs) and semiconductor technologies. In short order, traditional CPUs, insufficient for AI’s parallel processing needs, have given way to specialized chips: GPUs, TPUs, NPUs, and AI accelerators.

That prompted companies such as Nvidia, AMD, and Intel to expand their portfolios to include AI-optimized products, with Nvidia leading in GPUs for AI training and inference. And because AI workloads prioritize throughput, energy efficiency, and scalability, the broader tech industry has seen massive investments in data centers, with AI-focused chips like Nvidia’s H100 and AMD’s MI300 now forming the backbone of AI cloud computing.

At the same time, companies such as Amazon, Microsoft, and Google have developed custom chips (such as AWS Graviton and Google TPU) to reduce dependency on external suppliers and enhance AI performance.

In particular, the AI revolution has propelled growth at Nvidia, making it a dominant force in the data center market. The company was once focused on producing chips for gaming systems; its AI-driven hardware and software now outpace those efforts, which has led to remarkable financial gains. Nvidia’s market capitalization topped $1 trillion in May 2023 and passed $3.3 trillion in June 2024, making it the world’s most valuable company at that time.

The AI-chip industry, however, is about to change dramatically. Over the past several years, semiconductor developers and manufacturers have focused on supplying the data center needs of hyperscale cloud service providers such as Amazon Web Services, Google Cloud Platform and Microsoft Azure; organizations have relied heavily on those industry stalwarts for their internal AI development.

There’s now a shift toward smaller AI models that use only internal corporate data, allowing for more secure and customizable genAI applications and AI agents. At the same time, edge AI is taking hold because it allows AI processing to happen on devices (including PCs, smartphones, vehicles and IoT devices), reducing reliance on cloud infrastructure and spurring demand for efficient, low-power chips.

“The challenge is if you’re going to bring AI to the masses, you’re going to have to change the way you architect your solution; I think this is where Nvidia will be challenged because you can’t use a big, complex GPU to address endpoints,” said Mario Morales, a group vice president at research firm IDC. “So, there’s going to be an opportunity for new companies to come in — companies like Qualcomm, ST Micro, Renesas, Ambarella and all these companies that have a lot of the technology, but now it’ll be about how to use it.

“This is where the next frontier is for AI – the edge,” Morales said.

Turbulence in the market for some chip makers

Global semiconductor sales declined by about 11% in 2023, dropping from the previous year’s record of $574.1 billion to around $534 billion, but the downturn did not last. Sales are expected to increase by 22% in 2025, according to Morales, driven by AI adoption and a stabilization in PC and smartphone sales.

“If you’re making memory or making an AI accelerator, like Nvidia, Broadcom, AMD or even Marvell now, you’re doing very well,” Morales said. “But if you’re a semiconductor company like an ST Micro, Infineon, Renesas or Texas Instruments, you’ve been hit hard by excess inventory and a macroeconomy that’s been uncertain for industrial and automobile sectors. Those two markets last year outperformed, but this year they were hit very hard.”

Most LLMs used today rely on public data, but more than 80% of the world’s data is held by enterprises that won’t share it with platforms like OpenAI or Anthropic, according to Morales. That trend benefits processor companies, especially Nvidia, Qualcomm, and AMD. As organizations bring the technology in-house, highly specialized system-on-a-chip (SoC) designs with lower price points and greater energy efficiency are expected to begin dominating the market.

“I think it’s definitely going to change the dynamics in the market,” Morales said. “That’s why you’re seeing a lot of companies aligning themselves to address the edge and endpoints with their technology. I think that’s the next wave of growth you’re going to see along with the enterprise; the enterprise is adopting their own data center approach.”

Intel will continue to find a safe haven for its processors in PCs, and its decision to outsource some chip production to TSMC has kept it competitive with rival AMD. But Intel is likely to struggle to keep pace with other chip makers in emerging markets.

“Outside of that, if you look at their data center business, it’s still losing share to AMD and they have no answer for Nvidia,” Morales said.

While Intel’s latest x86 processors and Gaudi AI accelerators are designed to compete with Nvidia’s H100 and Blackwell GPUs, Morales sees them more as a “stopgap” effort than what the market is seeking.

“I do believe on the client side there’s an opportunity for Intel to take advantage of a replacement cycle with AI working its way into PCs,” he said. “They just received an endorsement from Microsoft for Copilot, so that gives their x86 line an opening; that’s where Intel can continue to fight until they recover from their transformation and all the changes that have happened at the company.”

To stay relevant in modern data centers — where Nvidia’s chips are driving growth — Intel and AMD will need to invest in GPUs, according to Andrew Chang, technology director at S&P Global Ratings.

“While CPUs remain essential, Nvidia dominates the AI chip market, leaving AMD and Intel struggling to compete,” Chang said. “AMD aims for $5 billion in AI chip sales by 2025, while Intel’s AI efforts, centered on its Gaudi platform, are minimal. Both companies will continue investing in GPUs and AI accelerators, showing some incremental revenue growth, but their share of the data center market will likely keep declining.”

Politics, the CHIPS Act and what happens after Jan. 20

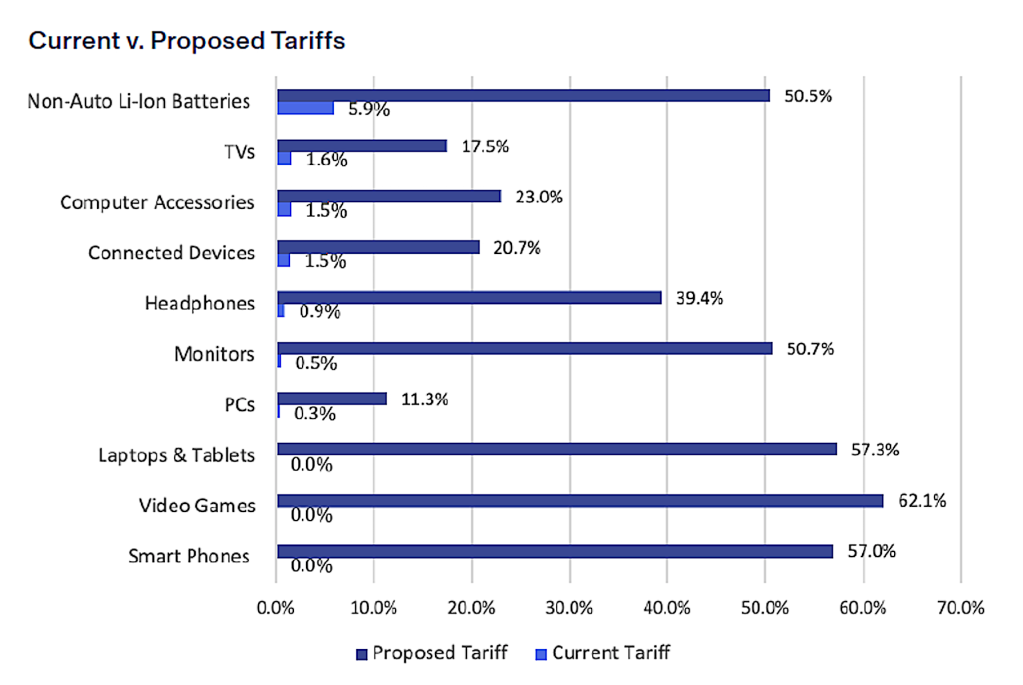

Geopolitical and economic factors, such as export restrictions, supply chain disruptions, and government policies, could also reshape the chip industry. President-elect Donald J. Trump, who takes office Jan. 20, has signaled he plans to impose heavy tariffs on chip imports.

The CHIPS and Science Act also promises billions of dollars to semiconductor developers and manufacturers that locate operations in the US. Under the Act, $39 billion in funding has been earmarked for several companies, including TSMC, Intel, Samsung and Micron, all of which have developed plans for, or are already building, new fabrication or research facilities.

But before that money is paid out, each company must meet specific milestones; until then, the funds remain unspent. While the promise of billions of dollars in incentives is unquestionably helping reshore US chip production, Morales pointed to the CHIPS Act’s 25% tax break as a greater benefit.

“Even a company like Intel…is getting about $50 billion [in tax breaks], which is unheard of. That’s where the winning payouts are,” he said.

Though Trump has signaled that government funding to encourage reshoring is the wrong tactic, industry experts do not believe the CHIPS Act will be drastically cut when he takes office. “We expect modest revisions to the CHIPS Act, but not something as drastic as cutting funding yet to be disbursed,” Morales said. “The CHIPS Act received bipartisan support and any attempt to revise this would face pushback from states that stand to benefit, such as Arizona and Ohio.”

Though high-end processors to power energy-sucking cloud data centers have dominated the market to date, energy-efficient AI processors for edge devices will likely continue to gain traction.

“Think about an AI PC this year or a smartphone that incorporates AI as well, or even a wearable device that has a smaller, more well-tuned model that can leverage AI inferencing,” Morales said. “This is where we’re going next, and I think it’s going to be very big over the coming years.

“And, I think AI inferencing, as a percentage of the companies, will be as big if not bigger than what we’ve seen in the data center, so far,” he added.

From LLMs to SLMs and edge devices

Enterprises and other organizations are also shifting their focus from single-modality AI models to multimodal AI: LLMs capable of processing and integrating multiple types of data, or “modalities,” such as text, images, audio, video, and sensory input. Drawing on diverse sources gives these models a more comprehensive understanding of the data and improves their performance across tasks.

Over 80% of organizations expect their AI workflows to increase in the next two years, while about two-thirds expect pressure to upgrade IT infrastructure, according to a report by S&P Global.

Sudeep Kesh, chief innovation officer at S&P Global Ratings, noted that AI is evolving toward smaller, task-specific models, but larger, general-purpose models will still be essential. “Both types will coexist, creating opportunities in each space,” he said.

A key challenge will be developing computationally and energy-efficient models, which will influence chip design and implementation. Chip makers will also need to address scalability, interoperability, and system integration — all of which are expected to drive technological advances across industries, improve autonomous systems, and enable future developments like edge AI, Kesh said.

In particular, as companies move away from cloud-based LLMs and embrace small language models (SLMs) that can be deployed on edge devices and endpoints, the industry will see increased interest in AI inferencing.

“It’s an environment where it’s feast or famine for the industry,” IDC’s Morales said. “What’s in store for the coming year? I think the growth we’ve seen in the data center has been phenomenal and it will continue into 2025. What I’m excited about is enterprises are beginning to look at prioritizing IT spending dollars in AI, and that will break a second wave of demand for processors.”