Sometimes a Windows installation simply goes off the rails. Menus don’t open properly, icons start moving around the desktop, File Explorer acts up, apps get weird, and so forth. Enough things can go wrong, or turn strange, that it’s important to understand various basic Windows repair strategies.

Over the past decade, one of the chief techniques in my own repair arsenal for Windows 10 and 11 has been what’s sometimes called an “in-place upgrade install” or an “upgrade repair install.” (Spoiler alert! This is absurdly easy to do in Windows 11 version 23H2 and later.)

Before going into the details of how to perform such a maneuver, let’s start with a definition and some explanation.

What is an in-place upgrade install?

An in-place upgrade install involves using the Windows OS installer to replace all the operating system files for Windows 10 or 11 on a PC. Basically, you’re using the installer’s setup.exe program to reinstall the same OS back over itself. This leaves user files entirely alone, retains many settings and preferences and, best of all, leaves already-installed apps and applications unchanged. It does, however, overwrite operating system files more or less completely. And in so doing, it often repairs a balky or misbehaving OS and returns it to normal, working condition.

It can take as little as 15 minutes to perform an in-place upgrade install. This maneuver doesn’t require much post-installation cleanup, tweaking, or follow-up activity, either.

Sounds too good to be true — what’s the catch?

Indeed, an in-place upgrade install can provide a quick and effective fix for many, many Windows problems and issues. I use this technique regularly, particularly when I notice that a system is starting to misbehave yet proves resistant to basic repair techniques, such as running the system file checker (SFC) or using the deployment image servicing and management (DISM) image cleanup capabilities.
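For reference, the two basic repairs mentioned above are normally run from an elevated command prompt as `DISM /Online /Cleanup-Image /RestoreHealth` and `sfc /scannow`. The short Python sketch below simply wraps those two commands so their exit codes can be collected in one place. The function name `run_basic_repairs`, the injectable `runner` parameter, and the DISM-before-SFC ordering are illustrative assumptions, not something this article prescribes.

```python
import subprocess

# The two built-in repair commands named in the article.
# Running DISM first is a common convention, assumed here.
REPAIR_COMMANDS = [
    ["DISM", "/Online", "/Cleanup-Image", "/RestoreHealth"],
    ["sfc", "/scannow"],
]


def run_basic_repairs(runner=subprocess.run):
    """Run each repair command in turn and return (command, exit_code) pairs.

    `runner` defaults to subprocess.run; it is a parameter only so the
    function can be exercised without actually touching Windows.
    """
    results = []
    for cmd in REPAIR_COMMANDS:
        completed = runner(cmd, check=False)
        results.append((" ".join(cmd), completed.returncode))
    return results
```

On a real PC this would need to run from an elevated (administrator) session on Windows. If the misbehavior persists after both commands finish, that is the point at which an in-place upgrade install becomes worth considering.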

But an in-place upgrade install is not a panacea, and it does not cure every Windows ill. It is particularly powerless to reverse changes to the Windows registry. (That’s one reason why Microsoft advises users either to steer clear of editing the registry or to take a registry snapshot they can restore later if they must go where angels fear to tread.)

Here are some additional key considerations that determine the suitability of an in-place upgrade install for a Windows installation.

Requirements for an in-place upgrade install

- You must be logged into an administrative account to perform an in-place upgrade install.

- Windows 10 or 11 must be running (and keep running) so that you can run the setup.exe installer from inside Windows itself. You cannot run an in-place upgrade install using a bootable Windows installer or when Windows is booted into Safe Mode. (Installing from bootable media is what’s called a “clean install,” and if you take that route, it does not save apps, applications, or any settings or preferences.)

- You will need at least 9GB plus whatever disk space Windows is using on the drive where it’s running to perform an in-place upgrade install. That’s because the installer renames the running version to Windows.old and lays down a whole new Windows folder for the upgrade it copies to disk. That extra ~9GB or so is needed for work space during the install process.

- The Windows installer you use must be the same edition (Home, Pro, Education, or Enterprise), the same language (for example, en-US for United States English, en-GB for British English), the same “bittedness” (32- or 64-bit for Windows 10; Windows 11 is 64-bit only), and the same build (or newer) as the Windows image it upgrades and repairs.
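The requirements above amount to a small checklist that can be expressed in code. The following Python sketch is a hypothetical pre-flight check: the `WindowsImage` class, the `check_requirements` function, and all of their parameters are names invented for illustration, and the values would have to be gathered by hand or by other tools. Only the rules themselves (administrative account, matching edition, language, and bitness, same or newer build, and roughly 9GB of work space on top of the space Windows already uses) come from the list above.

```python
from dataclasses import dataclass

WORKSPACE_GB = 9  # extra work space the installer needs, per the list above


@dataclass(frozen=True)
class WindowsImage:
    edition: str   # e.g. "Pro"
    language: str  # e.g. "en-US"
    bits: int      # 32 or 64
    build: int     # e.g. 22631 for Windows 11 23H2


def check_requirements(installed, installer, is_admin, windows_used_gb, free_gb):
    """Return a list of human-readable problems; an empty list means go ahead."""
    problems = []
    if not is_admin:
        problems.append("not logged in to an administrative account")
    if installer.edition.lower() != installed.edition.lower():
        problems.append("edition differs")
    if installer.language.lower() != installed.language.lower():
        problems.append("language differs")
    if installer.bits != installed.bits:
        problems.append("32/64-bit mismatch")
    if installer.build < installed.build:
        problems.append("installer build is older than the installed build")
    needed_gb = WORKSPACE_GB + windows_used_gb
    if free_gb < needed_gb:
        problems.append(f"need about {needed_gb:.0f}GB free, have {free_gb:.0f}GB")
    return problems
```

For example, a Pro, en-US, 64-bit installation at build 22631 paired with a Home or en-GB installer would come back with a non-empty problem list, which signals that a different ISO is needed.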

Post-installation checklist

Please note further that some cleanup or customization may be required once the in-place upgrade install has completed. You should check all these things, some of which may require some additional time and effort to complete:

- Custom fonts and customized system icons will be absent following an in-place upgrade install. If you want them back, you’ll have to restore them manually.

- Wi-Fi connections may need to be re-established (including providing SSIDs and passwords). Occasionally, networks may change from Private to Public and will have to be reset properly.

- Windows Update will only be current as of the date of the image file used for the in-place upgrade install. All subsequent updates must then be applied from Windows Update to make the new installation completely current.

- By default, Windows turns System Protection off. After an in-place upgrade install, System Protection must be turned on to enable capture and use of restore points if you want them.

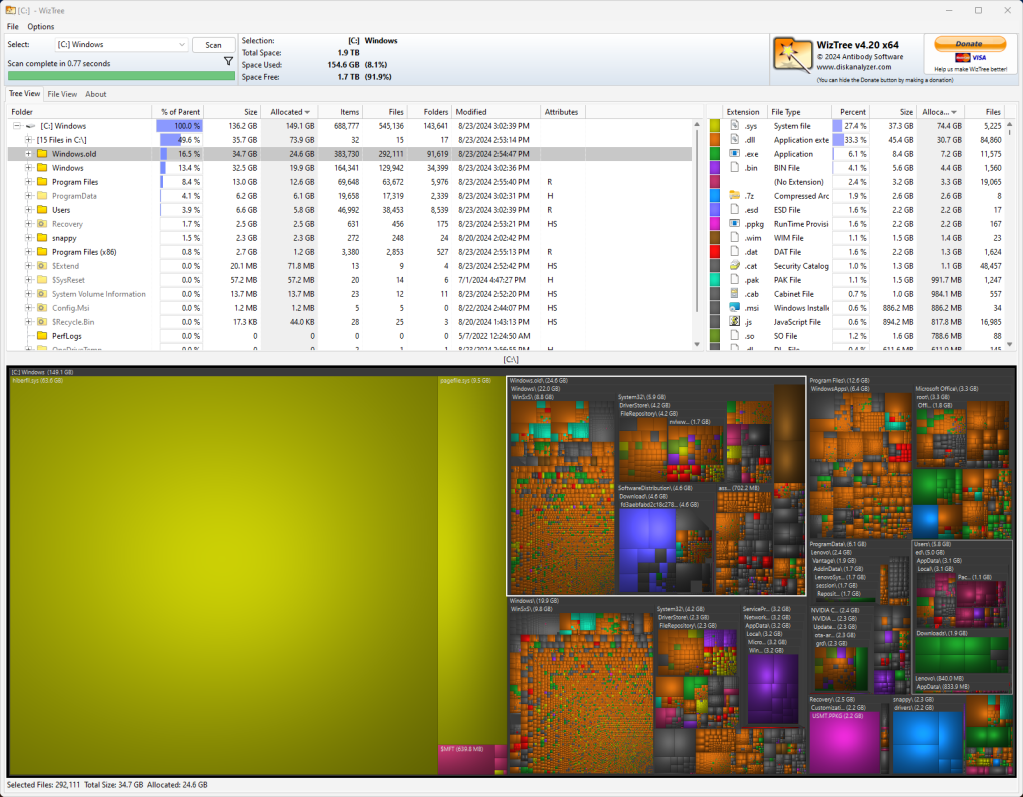

- The previous installation’s OS files in the Windows.old folder consume substantial disk space. Once things are working properly, run Disk Cleanup as Administrator to clear out those old files and recover the disk space they consume, which can run as high as 35GB. (You can see that folder represented in the WizTree disk space analyzer in Figure 1.)

Figure 1: On a Windows 11 test PC, the Windows.old folder (outlined in white at top center) comes in at 34.7GB after an in-place repair install.

Ed Tittel / IDG

Once you’ve chewed through this list and pondered all the potential gotchas, performing an in-place upgrade install is easy — ridiculously so in Windows 11 and straightforward in Windows 10 with the right Windows ISO in hand.

Windows 11: Click one button to perform an in-place upgrade install

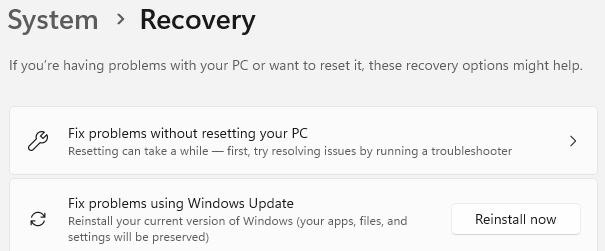

For versions 23H2 or later, you need only navigate to Settings > System > Recovery, then click the Reinstall now button next to “Fix problems using Windows Update,” as shown in Figure 2. It uses Windows Update for whatever version of Windows 11 you’ve got installed, matching the base version and installed updates exactly. It’s literally a one-button upgrade option.

Figure 2: Click “Reinstall now” and Windows Update does the rest.

Ed Tittel / IDG

That said, this does take some time: I’ve had it take anywhere from 35 to 110 minutes to complete on various test machines. (Newer PCs with fast CPUs finished faster than older PCs with slower ones.) For that reason, Windows 11 users might wish to use the speedier ISO installation method described next, which also works for Windows 11 versions prior to 23H2 and for Windows 10.

Windows 10 or 11: Perform an in-place upgrade install from an ISO or USB media

An ISO, also called an “ISO image,” is a large single file that originally represented the contents of an entire optical disc (a CD, DVD, or Blu-ray Disc). This format is well-suited for installing a large, complex operating system such as Windows because it can bundle up all the programs, files, configuration data and so forth that go into installing such an operating system on a PC.

Windows 10 users can visit Microsoft’s Download Windows 10 page to grab its Media Creation Tool (MCT) for Windows 10. Running the MCT offers an option to build a Windows 10 ISO file. This approach works only for current versions of Windows 10, though. If you need something older (or newer, like a Windows Insider ISO) you may want to turn to HeiDoc.net’s Windows ISO Downloader or UUP dump instead. If you want to grab an ISO for Windows 11, you can head to Microsoft’s Download Windows 11 page or UUP dump.

Remember: the ISO you use to perform the repair install must match the version you’re trying to repair. Your running OS can tell you everything you need to know to pick an ISO for an in-place upgrade repair install. See Windows 10 Forums and Windows 11 Forum for details on how to elicit that info.
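As one possible way to collect that information, the Python sketch below reads the edition, version string, and CPU architecture from the standard library's `platform` module and pulls the build number out of the version string. The helper names `build_from_version` and `describe_running_os` are made up for this example, and the sketch assumes Python 3.8 or later running on the PC in question. It does not report the display language, which has to be checked separately (for instance in Settings).

```python
import platform


def build_from_version(version):
    """Extract the build number from a string such as '10.0.22631'."""
    parts = version.split(".")
    if len(parts) >= 3 and parts[2].isdigit():
        return int(parts[2])
    return None


def describe_running_os():
    """Collect the details needed to choose a matching ISO.

    On non-Windows systems the Windows-specific fields come back empty.
    """
    _release, version, _csd, _ptype = platform.win32_ver()
    return {
        "edition": platform.win32_edition(),  # e.g. "Professional"
        "version": version,                   # e.g. "10.0.22631"
        "build": build_from_version(version),
        "machine": platform.machine(),        # e.g. "AMD64" on 64-bit PCs
    }
```

Note that Windows 11 still reports a version string beginning with 10.0, so the build number (22631 for version 23H2, for example) is the more reliable field to compare against an ISO.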

Once you’ve got the right ISO, you’ll need to do a little prep work before beginning the in-place upgrade process:

- Be sure to log in to Windows with an administrative account.

- If Windows runs on a drive that’s encrypted, you’ll need to suspend or turn off encryption before performing the in-place upgrade install. After the install completes, you can turn it back on again.

- If the target PC runs UEFI (the Unified Extensible Firmware Interface), turn off fast boot and secure boot before starting the in-place upgrade install. Again, you can turn them back on after it’s done.

- Disable or uninstall any third-party antivirus or security software that may be running (anything other than Windows Defender, in other words). Once again, you can reinstall or reenable it once the install is complete.

With that out of the way, running the repair install is dead simple:

- Mount the ISO.

- Navigate to the root of the virtual “CD Drive” into which the ISO’s contents get loaded.

- Run the setup.exe file.

If you’ve got a bootable USB medium (normally a flash drive), you can skip the mounting step. Just open the drive in File Explorer and run setup.exe.
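Before launching anything, it can help to confirm that the mounted ISO or USB drive really is Windows installation media. The Python sketch below is a hypothetical helper (the name `find_setup` is invented here) that looks for setup.exe at the root of a drive along with an install.wim or install.esd image in the sources folder, which is where standard Windows media keeps it. Media built by third-party tools may split the image into other files, which this simple check does not cover.

```python
from pathlib import Path

# Image file names found in the "sources" folder of standard Windows media.
IMAGE_NAMES = ("install.wim", "install.esd")


def find_setup(drive_root):
    """Return the path to setup.exe if drive_root looks like Windows media.

    Returns None when setup.exe or the OS image file is missing.
    """
    root = Path(drive_root)
    setup = root / "setup.exe"
    sources = root / "sources"
    has_image = any((sources / name).is_file() for name in IMAGE_NAMES)
    if setup.is_file() and has_image:
        return setup
    return None
```

A call such as `find_setup("E:\\")` (where E: is whatever drive letter the mounted ISO receives) returns the path to run, or `None` if the drive does not hold what the installer needs.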

When the Windows installer gets going, accept the license terms, select Upgrade this PC now, allow updates, and click Next. Windows grabs updates, switches over to the installer OS image, and gets itself ready to run. You must then accept the license terms and allow the OS to start the actual in-place upgrade.

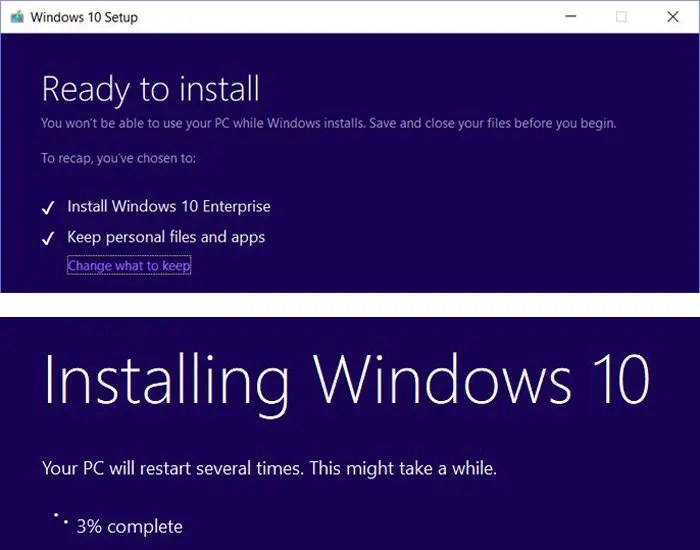

By default, the installer keeps all personal files and apps on the target machine. This is what you want, so there’s no need to dig into the “Change what to keep” item on the “Ready to install” page. Just be sure that both “Install Windows 10” (or “Install Windows 11”) and “Keep personal files and apps” are checked on that screen.

As the in-place upgrade runs, the circular progress indicator shows that it’s upgrading Windows, from 1% to 100%. Figure 3 shows the corresponding Windows 10 screencaps; Windows 11 screens are similar but not identical.

Figure 3: The Windows 10 installer prepares to reinstall the OS (top) and then grinds through the initial installation phase prior to the first reboot (bottom).

Ed Tittel / IDG

After that completes, the installer takes you through some additional setup screens where you have the option to customize settings or take the express route to completion. Once that is complete, you’ll sit through a number of colored screens as the installer puts the finishing touches on your in-place Windows 10 or 11 upgrade.

For the vast majority of PCs, it will take less than 20 minutes for this process to complete. Older, slower PCs may take half an hour or more, though I have not seen that happen myself. This means that when an ISO is available, this process goes much faster than the simpler one-button Windows 11 option described in the previous section. I tend to prefer the ISO method for my Windows 11 repairs for that reason and because I keep current ISOs around as a matter of habit and choice.

After the installation

Please remember to check the list of items in need of possible attention and effort when the install is finished, as outlined earlier in the story.

By default, Windows keeps the Windows.old folder around for 10 days after such an install. If you’re sure things are working, you can remove it sooner. On the test Windows 11 PC whose Windows.old folder weighed in at just under 35GB in Figure 1, running Disk Cleanup (cleanmgr.exe) returned nearly 25GB worth of disk space, as you can see in Figure 4. That process took about 5 minutes to complete.

Figure 4: Disk Cleanup offers to recover 24.4GB on the repaired Windows 11 PC.

Ed Tittel / IDG
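To see how much space Windows.old is holding and how long Windows will keep it by default, a few lines of Python are enough. The sketch below is illustrative only: `folder_size_gb` and `default_removal_date` are hypothetical helper names, the 10-day figure is the default cited above, and the size it reports may differ somewhat from what WizTree or Disk Cleanup shows because files that cannot be read are skipped.

```python
import os
from datetime import date, timedelta

RETENTION_DAYS = 10  # default retention period cited in the article


def folder_size_gb(path):
    """Total size of all readable files under path, in gigabytes (GiB)."""
    total_bytes = 0
    # onerror swallows folders that cannot be listed (e.g. access denied).
    for dirpath, _dirnames, filenames in os.walk(path, onerror=lambda err: None):
        for name in filenames:
            try:
                total_bytes += os.path.getsize(os.path.join(dirpath, name))
            except OSError:
                pass  # skip files that vanish or cannot be read
    return total_bytes / 1024**3


def default_removal_date(install_day):
    """Date after which Windows removes Windows.old by default."""
    return install_day + timedelta(days=RETENTION_DAYS)
```

Running `folder_size_gb(r"C:\Windows.old")` from an elevated session gives a rough figure to compare against what Disk Cleanup offers to recover.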

A strategy for using Windows in-place upgrade installs

Knowing that I can perform an in-place upgrade install quickly and easily has really changed my outlook on Windows troubleshooting. Except for hardware problems (or driver issues, which tie directly into hardware as well), if I find myself spending half an hour troubleshooting a Windows problem, I’m already asking, “Is it time for an in-place upgrade install?” Once that time stretches past one hour, only a compelling reason not to perform an in-place upgrade install will keep me laboring away at other fixes.

Simply put, an in-place upgrade install is a great solution for resolving trying or opaque issues with Windows — as long as the target OS is still running well and long enough to run setup.exe through the first of the three or four reboots typical during Windows 10 or 11 installation. If you can make it to the first reboot, the new OS takes over after that anyway, and most problems will be fixed.

Over the past year, I’ve either experienced directly or read about an in-place upgrade install fixing a lengthy laundry list of vexing problems, including these:

- Issues with system fonts, icons, thumbnails, and other presentation matters.

- Networking problems with Wi-Fi and Ethernet, and with misbehaving or absent network interfaces.

- Start and program menu issues, navigation and taskbar problems, and application window issues related to placement and sizing.

- Flaky or erratic behavior from File Explorer, Edge, and UWP apps. Ditto for general OS instability (slow performance, unreliable system utilities, or frequent OS errors).

- Otherwise intractable Windows Update issues (Windows 10 or 11 PCs can’t or won’t download or install updates or feature upgrades).

These days, if a Windows 10 or 11 problem proves hard to diagnose or fix, I’ll turn to an in-place upgrade install as a next or inevitable step in the troubleshooting and repair process. Much of the time, it provides the fix that’s needed. Savvy admins and power users could do worse than give it a try. Cheers!

This article was originally published in March 2018 and updated in November 2024.