As PC, chip, and other component makers unveil products tailored to generative artificial intelligence (genAI) needs on edge devices, users can expect to see far more task automation and copilot-like assistants embedded on desktops and laptops next year.

PC and chip manufacturers — including AMD, Dell, HP, Lenovo, Intel, and Nvidia — have all been touting AI PC innovations to come over the next year or so. Those announcements come during a crucial timeframe for Windows users: Windows 10 will hit its support end of life next October.

Forrester Research defines an AI PC as one that has an embedded AI processor and algorithms specifically designed to improve the experience of AI workloads across the central processing unit (CPU), graphics processing unit (GPU), and neural processing unit, or NPU. (NPUs allow the PCs to run AI algorithms at lightning-fast speeds by offloading specific functions.)

“While employees have run AI on client operating systems (OS) for years — think background blur or noise cancellation — most AI processing still happens within cloud services such as Microsoft Teams,” Forrester explained in a report. “AI PCs are now disrupting the cloud-only AI model to bring that processing to local devices running any OS.”

Forrester has tagged 2025 “the Year of the AI PC” — and if the number of recent product announcements is any indication, that’s likely to be the case.

Gartner Research projects PC shipments will grow by 1.6% in 2024 and by 7.7% in 2025. The biggest growth driver will not be the arrival of AI PCs, but the need for many companies and users to refresh their computers and move to Windows 11.

“Our assumption is that [AI PCs] will not drive shipment growth, meaning that most end users won’t replace their PCs because they want to have the AI. They will happen to select [an AI PC] if they will replace their PCs for specific reasons — e.g., OS upgrade, aging PCs, or a new school or job, and most importantly, the price is right for them,” said Gartner analyst Mika Kitagawa.

The biggest impact of AI PCs on the industry will be revenue growth driven by component changes, such as the addition of an NPU core and larger memory requirements. AI PCs are also likely to boost application service provider and end-user spending. Gartner predicts end-user spending will rise 5.4% this year and jump 11.6% in 2025, a growth rate that will outpace AI PC shipment growth.

“In five years, the [AI PC] will become a standard PC configuration, and the majority of PCs will have an NPU core,” Kitagawa said.

Tom Mainelli, an IDC Research group vice president, noted that across the silicon ecosystem there are already systems-on-chips (SoCs) with NPUs from Apple, AMD, Intel, and Qualcomm. “Apple led the charge with its M Series, which has included an NPU since arriving on the Mac in 2020,” he said.

“To date, neither the operating systems — Windows or macOS — nor the apps people use have really leveraged the NPU,” Mainelli said. “But that is beginning to change, and we will see a big upswing in OSes and apps beginning to leverage the benefits of running AI locally, versus in the cloud, as the installed base of systems continues to grow.”

Windows 11 has several genAI features built in, and Apple is slated to roll out “Apple Intelligence” features next week.

Nvidia chips, particularly the company’s GPUs, are already widely used in PCs. They’re popular for gaming, graphic design, video editing, and machine learning applications. Its GeForce series is especially well-known among gamers, while the Quadro and Tesla series are often used in professional and scientific computing. Many PC builders and gamers choose Nvidia processors for their performance and advanced features such as ray tracing and AI-enhanced graphics.

Nvidia isn’t the only manufacturer trying to get into the AI PC game. Samsung Electronics has started mass production of its most powerful SSD for AI PCs, the PM9E1. Intel earlier this year announced its line of “Ultra” chips, which are also aimed at genAI PC operations, and Lenovo just introduced its “Smarter AI” line of tools, which includes agents and AI assistants across a number of devices. And AMD has touted new CPUs offering greater processing power tailored for AI operations.

“AMD is aggressively pursuing its strategy of being a full-breadth processor provider with a heavy emphasis on the emerging AI market,” said Jack Gold, principal analyst with tech industry research firm J. Gold Associates. “It is successfully positioning itself as an alternative to both Intel and Nvidia, as well as an innovator in its own right.”

Upcoming genAI features need sophisticated hardware

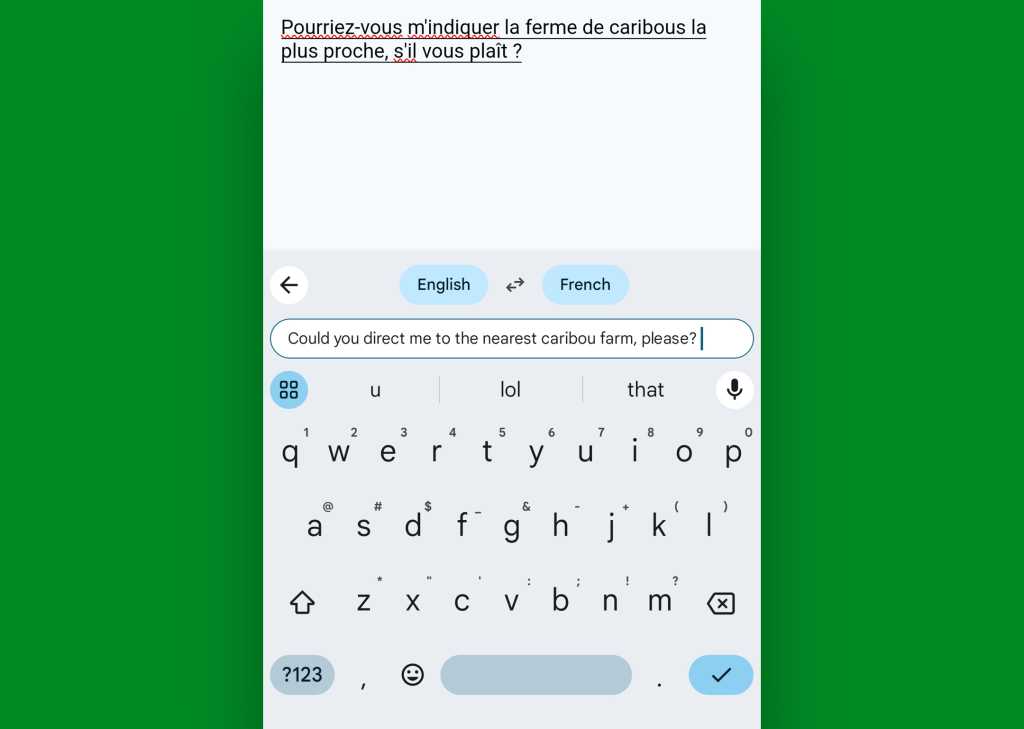

“Leaders foresee many use cases for genAI, from content creation to meeting transcription and code development,” Forrester said in its report. “While corporate-approved genAI apps such as Microsoft Copilot often run as cloud services, running them locally enables them to interact with local hardware, such as cameras and microphones, with less latency.”

For his part, Mainelli will be watching to see how Apple rolls out Apple Intelligence on the Mac — and how users respond to the new features.

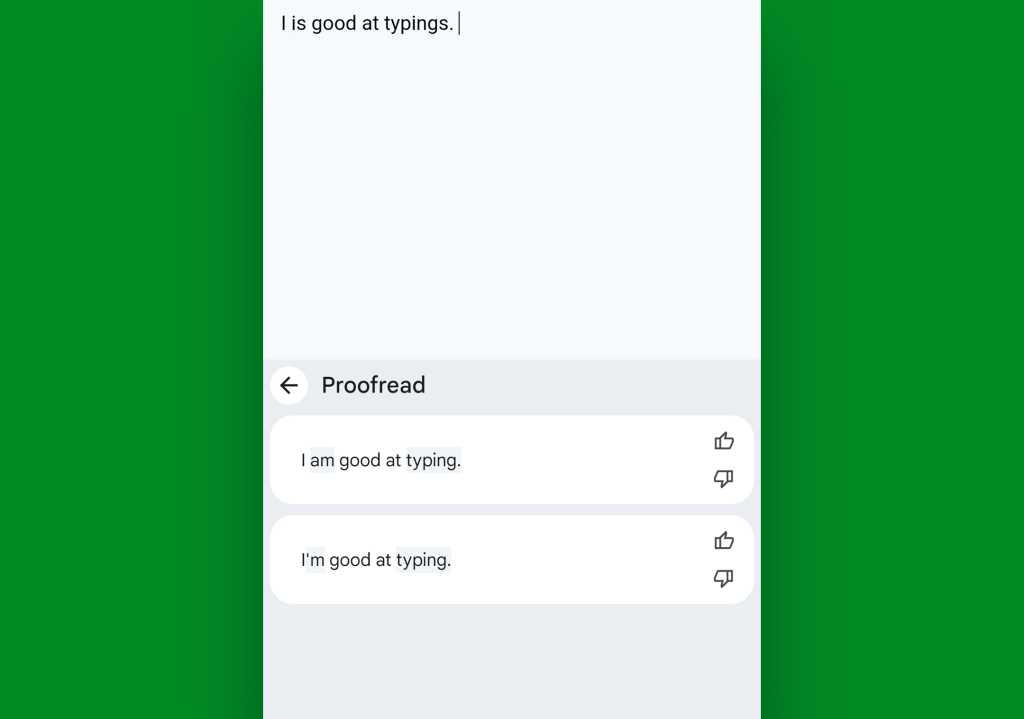

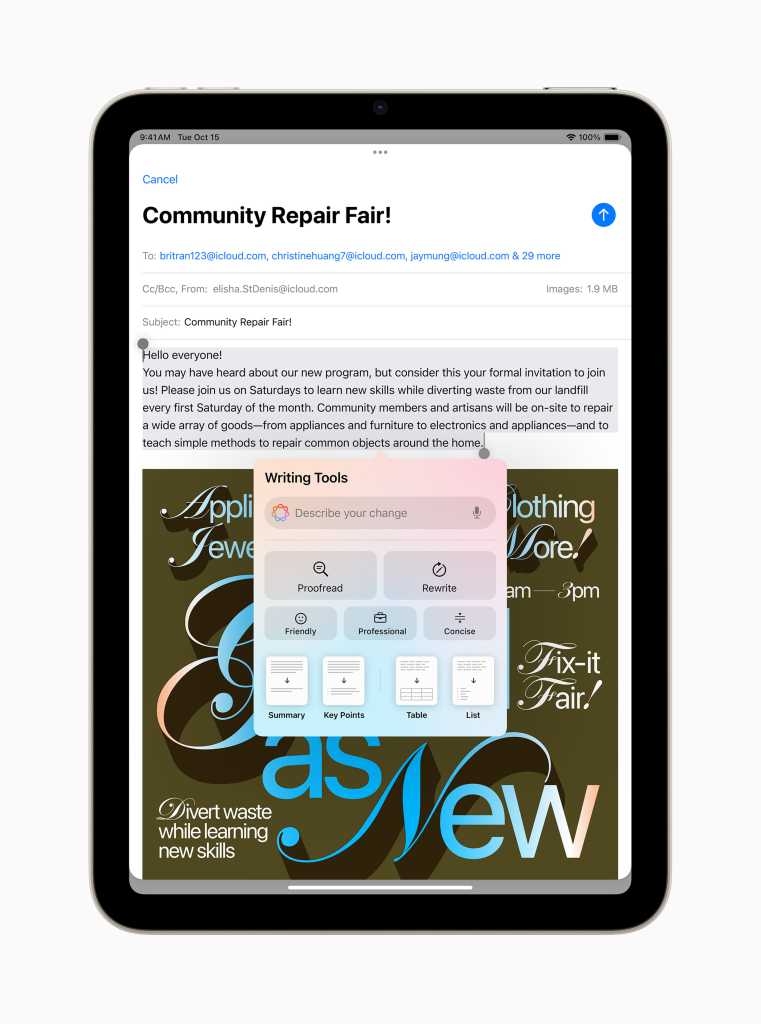

Like Microsoft’s cloud-based Copilot chatbot, Apple Intelligence has automated writing tools including rewrite and proofread functionality. The onboard genAI tools can also generate email and document summaries, pull out key points and lists from an article or document, and generate images through the Image Playground app.

“And by the end of this year, we will see Microsoft’s Copilot+ features land on Intel and AMD systems with newer 40-plus TOPS NPUs in addition to systems already shipping with Qualcomm’s silicon,” Mainelli said.

Independent software vendors (ISVs) will also use AI chips to enable new use cases, especially for creative professionals. For example, the team behind Audacity, the open-source audio editing software, is working with Intel to deliver AI audio production capabilities for musicians, such as text-to-audio creation, instrument separation, and vocal-to-text transcription.

Dedicated AI chipsets are also expected to improve the performance of classic collaboration features, such as background blur and noise cancellation, by sharing resources across CPUs, GPUs, and NPUs.

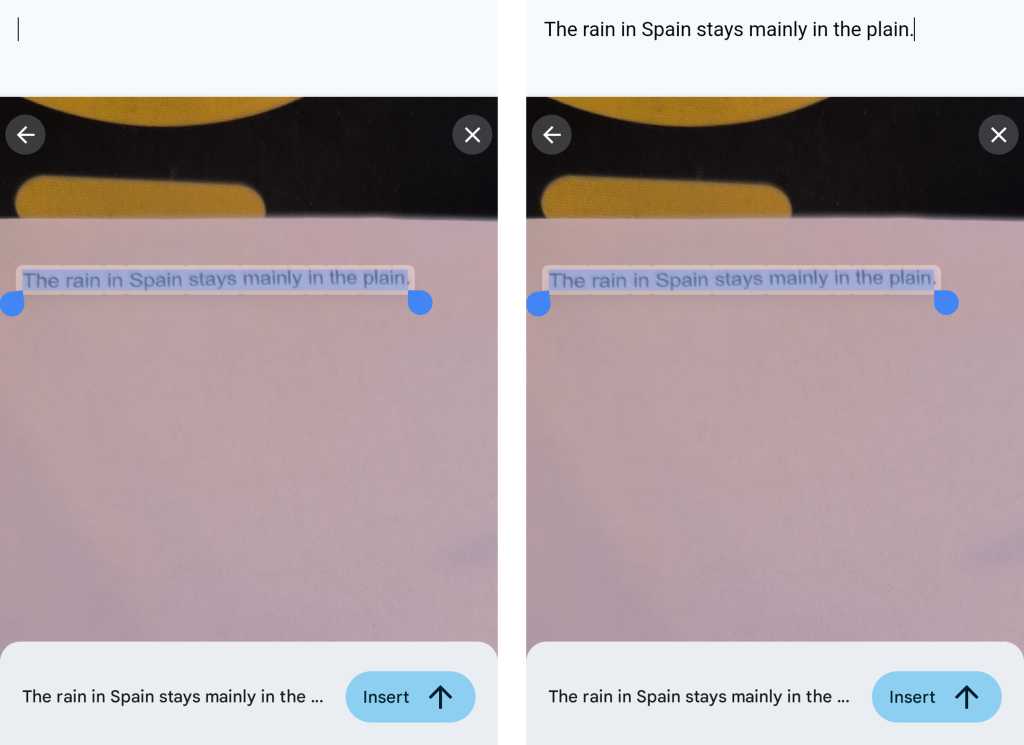

“Upset that your hair never looks right with a blurred background? On-device AI will fix that, rendering a much finer distinction between the subject and the blurred background,” Forrester said. “More importantly, the AI PC will also enable new use cases, such as eye contact correction, portrait blur, auto framing, lighting adjustment, and digital avatars.”

From the cloud to the edge

Experts see AI features and tools moving increasingly to the edge, embedded in smartphones, laptops, and IoT devices. There, AI computation is done near the user and close to where the data is located, rather than centrally in a cloud computing facility or private data center. That means less lag time and better security.

Lenovo, for example, just released AI Now, an AI agent that leverages a local large language model (LLM) built on Meta’s Llama 3, enabling a chatbot that’s able to run on PCs locally without an internet connection. And just last month, HP announced two AI PCs: the OmniBook Ultra Flip 2-in-1 laptop and the HP EliteBook X 14-inch Next-Gen AI laptop. The two PCs come with three engines (CPU, GPU, and NPU) to accelerate AI applications and include either an Intel Core Ultra processor with a dedicated AI engine or an AMD Ryzen PRO NPU processor enabling up to 55 TOPS (tera operations per second) performance.

The HP laptops come with features such as a 9-megapixel AI-enhanced webcam for more accurate presence detection and adaptive dimming, auto audio tuning with AI noise reduction, HP Dynamic Voice Leveling to optimize voice clarity, and AI-enhanced security features.

AI could do for productivity what search engines like Google once did for finding content online, according to Gold. “With AI and neural processing units, mundane tasks will get easier, with things like trying to find that email or document I remember creating but can’t figure out where it is,” he said. “It will also make things like videoconferencing much more intuitive and useful as it takes out many of the ‘settings’ we now have to do.”

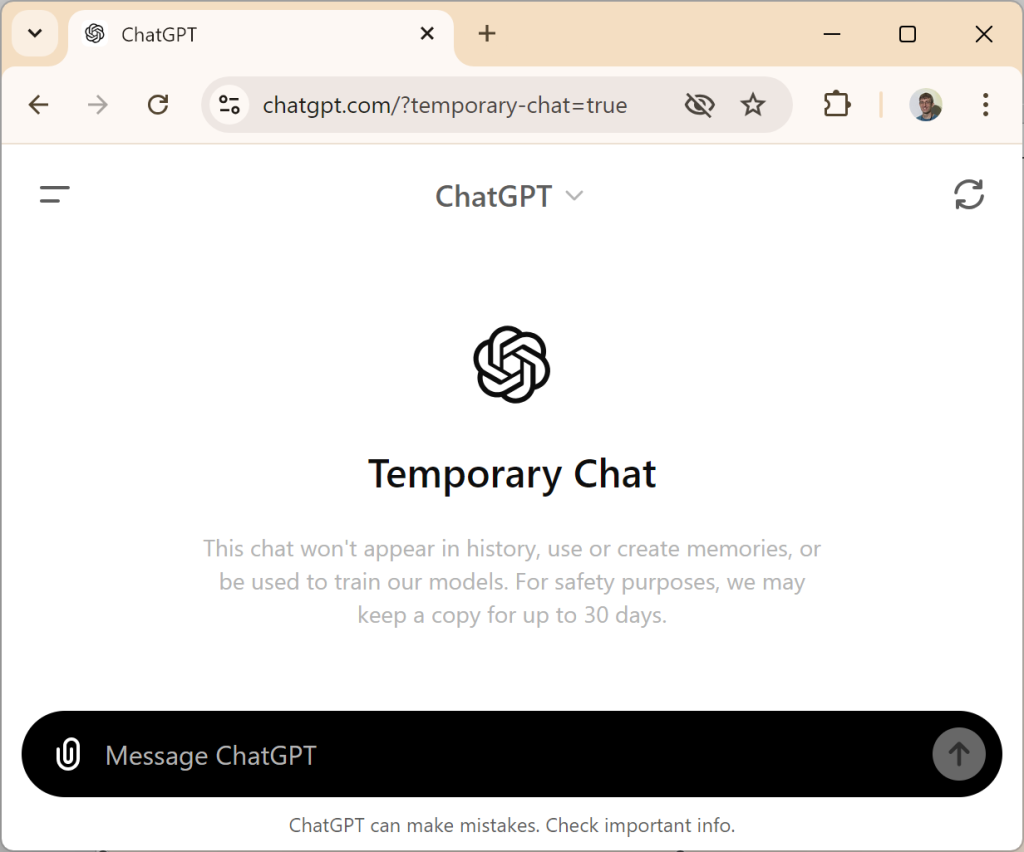

With the arrival of specific AI “agents,” PC users will soon be able to have tasks done for them automatically because the operating system will be much smarter with AI assistance. “[That means] I don’t have to try and find that setting hidden six layers below the OS screen,” Gold said. “In theory, security could also get better as we watch and learn about malware practices and phishing.

“And we can use AI to help with simple tasks like writing better or summarizing the 200 emails I got yesterday I haven’t had time to read,” he said.

Apple, Samsung, and other smartphone and silicon manufacturers are rolling out AI capabilities on their hardware, fundamentally changing the way users interact with edge devices like smartphones, tablets, and laptops.

“While I am very excited to see how the OS vendors and software developers add AI features to existing apps and features, I’m most excited to see how they leverage local AI features to evolve how we interact with our devices and bring to market new experiences that we didn’t know we needed,” Mainelli said.

But caution remains the watchword

Gold cautioned there are also downsides to the sudden arrival of genAI and how quickly the technology continues to evolve.

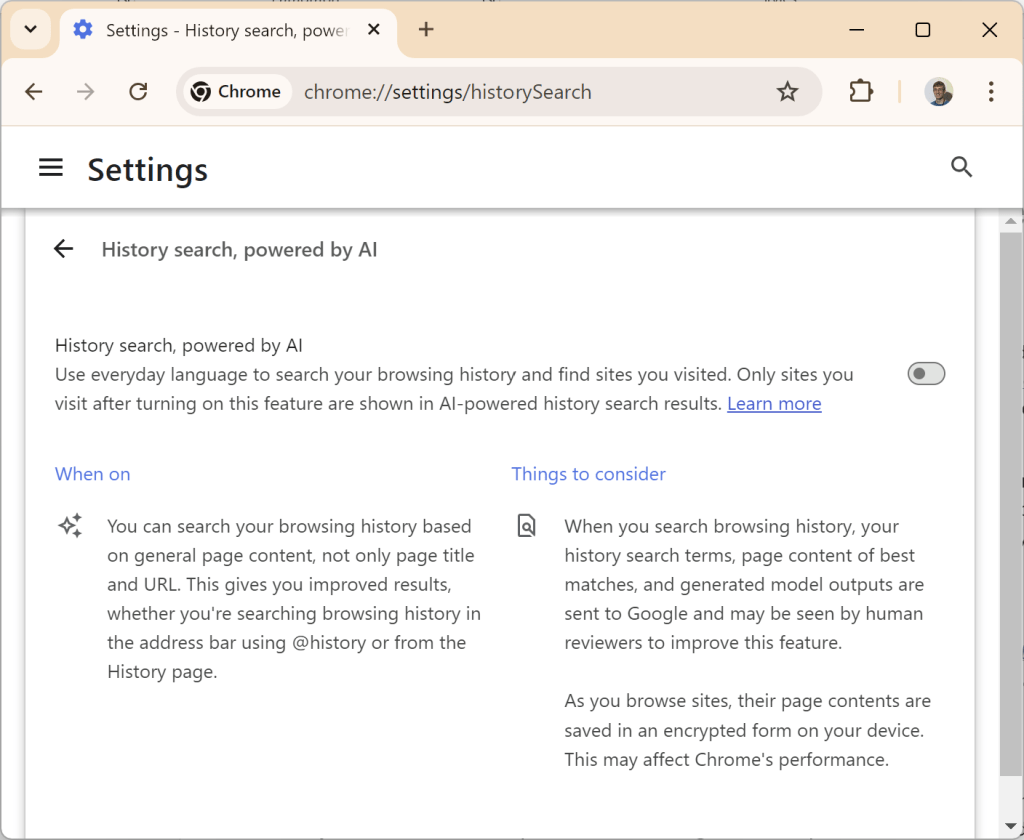

“With AI looking at or recording everything that we do, is there a privacy concern? And if the data is stored, who has access?” he said. “This is the issue with Microsoft Recall, and it’s very concerning to track everything I do in a database that could be exposed. It also means that if we rely on AI, how do we know the models were properly trained and not making some critical mistakes?”

AI errors and hallucinations remain commonplace, meaning users will have to deal with what corporate IT has been wrestling with for two years.

“On-device generative AI could be susceptible to the same issues we encounter with today’s cloud-based generative AI,” Mainelli said. “Consumer and commercial users will need to weigh the risks of errors and hallucinations against the potential productivity benefits.”

“From the hardware side, more complex processing means we’ll have more ways for the processors to have faults,” Gold added. “This is not just about AI, but as you increase complexity, the chances of something going wrong gets higher. There is also an issue of compatibility. Do software vendors have programs specific to Intel, AMD, Nvidia, Arm? In all likelihood, at least for now, yes they do.”

As genAI tools and features multiply, the level of software support will also have to grow, and companies will need to face the possibility of compatibility issues. AI features will also take a lot of processing power, and it’s not clear how heavy use of them on PCs and other devices might affect battery life, Gold noted.

“If I’m doing heavy AI workloads, what does that do to battery life — much like heavy graphics workloads affect battery life dramatically,” he said.

Traditional on-device security might not be able to prevent attacks that target AI applications and tools, which could result in data privacy vulnerabilities. Those cyberattacks can come in a variety of forms: prompt injection, AI model tampering, knowledge base poisoning, data exfiltration, personal data exposure, local file vulnerability, and even the malicious manipulation of specific AI apps.

“Regarding security, AI indeed represents some risk (as is true with any new technology),” Mainelli said, “but I expect it to be mostly positive when it comes to securing the PC. By leveraging the low-power NPU to run security persistently and pervasively on the system, security vendors should be able to use AI to make security less intrusive and bothersome to the end user, which means they’ll be less likely to try to circumvent it.”