The built-in Windows Reliability Monitor remains an oft-overlooked troubleshooting gem. It’s actually a specialized part of Windows’ general-purpose Performance Monitor tool (perfmon.exe). While more limited in scope and capability, Reliability Monitor (a.k.a. ReliMon) is much, much easier to use.

Reliability Monitor zeroes in on and tracks a limited set of errors and changes on Windows 10 and 11 desktops (and earlier versions going back to Windows Vista), offering immediate diagnostic information to administrators and power users trying to puzzle their way through crashes, failures, hiccups, and more.

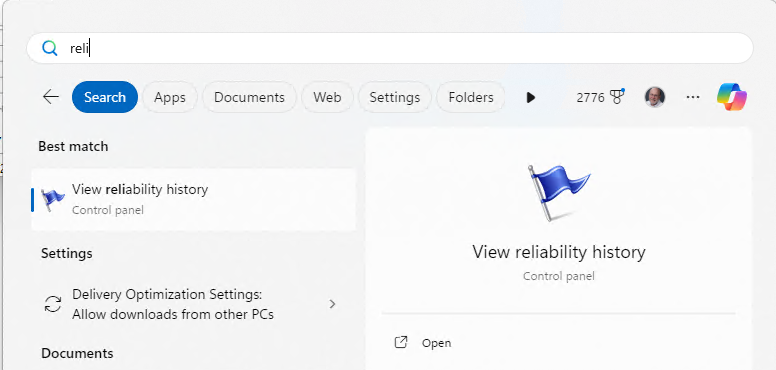

Launch Reliability Monitor

There are many ways to get to Reliability Monitor in Windows 10 and 11. If you type “reli” into the Windows search box, an entry that reads “View reliability history” usually pops up on the Start menu, as shown in Figure 1. Click that entry to open the Reliability Monitor application window.

You can also click Start > Settings, then type “reli” into the Settings search box to get the same menu option.

Figure 1: Type “reli” into the Start menu, and you’ll see “View reliability history” to the right.

Ed Tittel / IDG

To navigate to this item in Control Panel, open Control Panel and select Security and Maintenance (in Category view, it sits under System and Security), expand the Maintenance heading, and click View reliability history. Yet another method of launching ReliMon is to press Win key + R to open the Run box, then type perfmon /rel. That same command works from any Windows command-line interface.
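If you open ReliMon often, the same perfmon /rel command can be launched from a script. Here is a minimal Python sketch, assuming Python 3.9 or later on the Windows machine; the function names are hypothetical, and the only Windows piece it relies on is the perfmon /rel command described above.

```python
import subprocess
import sys


def relimon_command() -> list[str]:
    """Return the argument list that opens Reliability Monitor (perfmon /rel)."""
    return ["perfmon", "/rel"]


def launch_relimon() -> subprocess.Popen:
    """Open the Reliability Monitor window without waiting for it to close."""
    # perfmon.exe ships with Windows only, so fail fast on other platforms.
    if sys.platform != "win32":
        raise OSError("Reliability Monitor is only available on Windows")
    return subprocess.Popen(relimon_command())
```

Calling launch_relimon() from a login script or scheduled task opens the same window you would get from the Run box.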

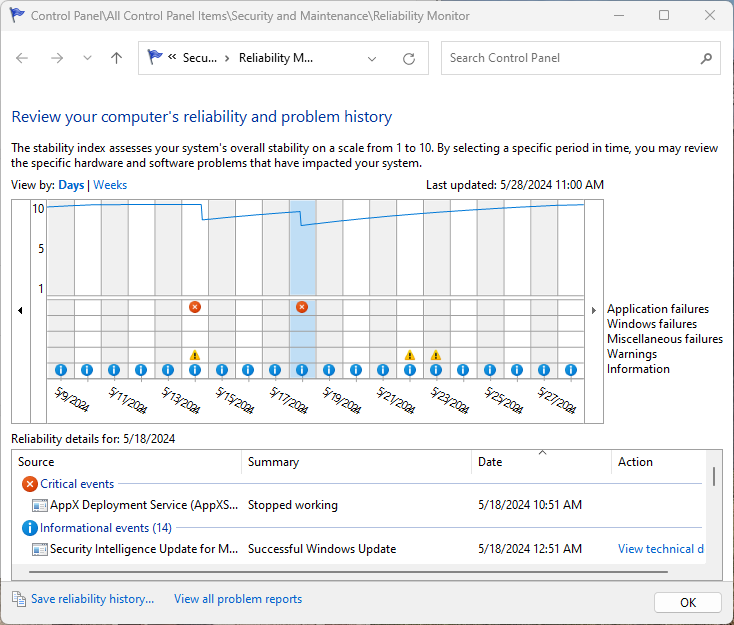

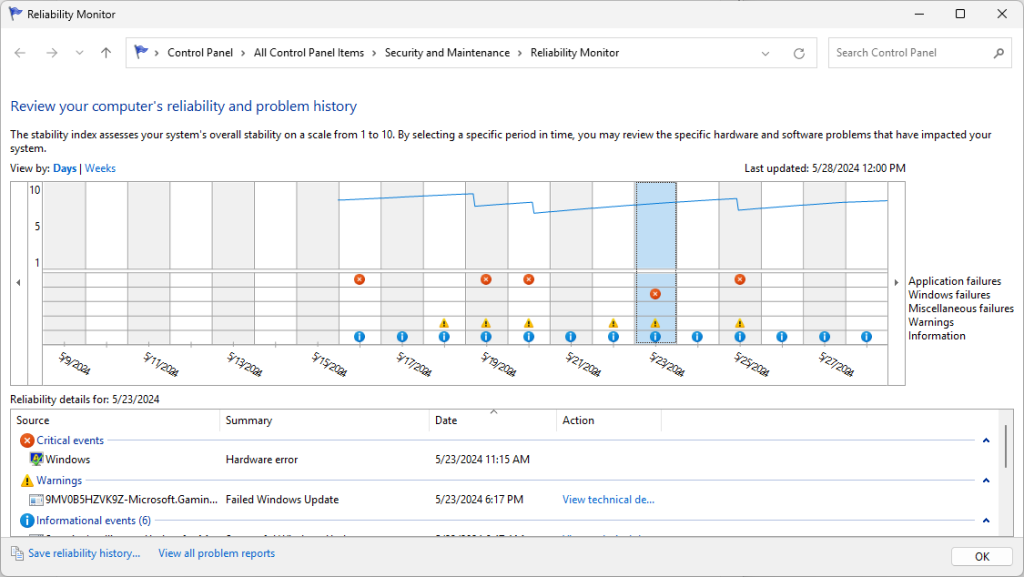

However you invoke Reliability Monitor, you’ll find it has useful things to tell you. Figure 2 shows the main ReliMon screen tracking the reliability of my Windows 11 production PC from May 9 through May 28. It shows a stability index at or near the maximum of 10 on the left-hand side, errors (denoted by a red circle with a white X) on May 14 and 18, and a climb back to a “perfect 10” on the right.

Figure 2: The main ReliMon window, which traces reliability on a scale of 1 (bottom) to 10 (top) from May 9 through May 28. Note the error markers on May 14 and 18.

Ed Tittel / IDG
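The values behind that graph can also be pulled programmatically. As a minimal sketch, assuming Windows PowerShell is on the path and the WMI class Win32_ReliabilityStabilityMetrics (with its SystemStabilityIndex and TimeGenerated properties) is populated on your machine, the Python below runs a query and summarizes the lowest, highest, and latest index values. The helper names are hypothetical; only summarize_stability() is meaningful off Windows.

```python
import json
import subprocess

# Assumption: run on Windows, where Get-CimInstance can read this WMI class.
# TimeGenerated is rendered with .NET's sortable 's' format (yyyy-MM-ddTHH:mm:ss).
PS_QUERY = (
    "Get-CimInstance -ClassName Win32_ReliabilityStabilityMetrics | "
    "Select-Object SystemStabilityIndex, "
    "@{n='TimeGenerated';e={$_.TimeGenerated.ToString('s')}} | "
    "ConvertTo-Json"
)


def fetch_stability_json() -> str:
    """Run the WMI query through PowerShell and return its raw JSON output."""
    result = subprocess.run(
        ["powershell", "-NoProfile", "-Command", PS_QUERY],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def summarize_stability(raw_json: str) -> dict:
    """Return the lowest, highest, and most recent stability index values."""
    if not raw_json.strip():
        raise ValueError("no stability records returned")
    data = json.loads(raw_json)
    # ConvertTo-Json emits a bare object, not a list, for a single record.
    if isinstance(data, dict):
        data = [data]
    # Sortable timestamps order correctly as plain strings.
    samples = sorted(data, key=lambda rec: rec["TimeGenerated"])
    values = [float(rec["SystemStabilityIndex"]) for rec in samples]
    return {"min": min(values), "max": max(values), "latest": values[-1]}
```

On Windows, summarize_stability(fetch_stability_json()) returns a small dict you could log or compare across machines.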

Look to the right of the timeline, where labels identify the rows in the table underneath the stability graph. They read:

- Application failures: Provides timestamps and additional info about application or app crashes, hangs, and other issues, of which “stopped working” is most typical.

- Windows failures: Indicates Windows OS or hardware errors that cause crashes, hangs, BSODs, and other issues. You can see a “Windows hardware error” in Figure 3 below (it turned out to be related to the graphics driver).

- Miscellaneous failures: Failures or crashes that fall outside the realm of apps, applications, and the OS, usually something bus- or peripheral-related. ReliMon also records a “shut down unexpectedly” or “not properly shut down” item as a miscellaneous failure when Windows 10 or 11 hangs and you cycle power to restart the OS.

- Warnings: Warnings and Information items both usually relate to updates applied to the current host, through Windows Update, the Microsoft Store, and so forth. Warnings usually document failed or incomplete updates.

- Information: Related to updates from various sources successfully applied to the current host.

Next up is a somewhat more error-prone PC, a Lenovo ThinkPad X380 Yoga, which shows what a run of frequent errors looks like in ReliMon (see Figure 3). Errors occur on five days in a 10-day stretch, from May 16 through May 25. Except for the hardware error shown on May 23, they all come from built-in Windows facilities and applications, including the AppX Deployment Service, Teams, and Phone Link, among others.

Figure 3: The highlighted item (May 23) shows a Windows hardware error; other errors occurred on May 16, 19, 20, and 25. Ouch!

Ed Tittel / IDG

What Reliability Monitor can tell you about critical errors

By clicking on a specific day in the timeline (shown as a vertical blue bar for May 23 in Figure 3), you can see events reported for that day in a list below the timeline. Double-click any item in the list to pop up a detail pane with more information.
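The same per-day grouping can be reproduced from exported data. A minimal sketch, assuming you first save the reliability records to JSON with a PowerShell command along the lines of `Get-CimInstance -ClassName Win32_ReliabilityRecords | Select-Object SourceName, ProductName, Message, @{n='TimeGenerated';e={$_.TimeGenerated.ToString('s')}} | ConvertTo-Json | Set-Content -Encoding UTF8 records.json` (the file name is hypothetical, as are the Python helper names below).

```python
import json
from collections import defaultdict
from pathlib import Path


def load_records(path: str) -> list[dict]:
    """Load reliability records exported with ConvertTo-Json."""
    # utf-8-sig tolerates the byte-order mark that Windows PowerShell 5.1 writes.
    data = json.loads(Path(path).read_text(encoding="utf-8-sig"))
    # A single record is serialized as an object rather than a list.
    return [data] if isinstance(data, dict) else data


def events_by_day(records: list[dict]) -> dict[str, list[str]]:
    """Group records by calendar day, like clicking a column in the timeline."""
    days = defaultdict(list)
    for rec in sorted(records, key=lambda r: r["TimeGenerated"]):
        day = rec["TimeGenerated"][:10]  # 'YYYY-MM-DD' prefix of the sortable timestamp
        days[day].append(f'{rec["ProductName"]}: {rec["SourceName"]}')
    return dict(days)
```

For example, events_by_day(load_records("records.json")) maps each date to the product and source of every record logged that day, earliest first.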

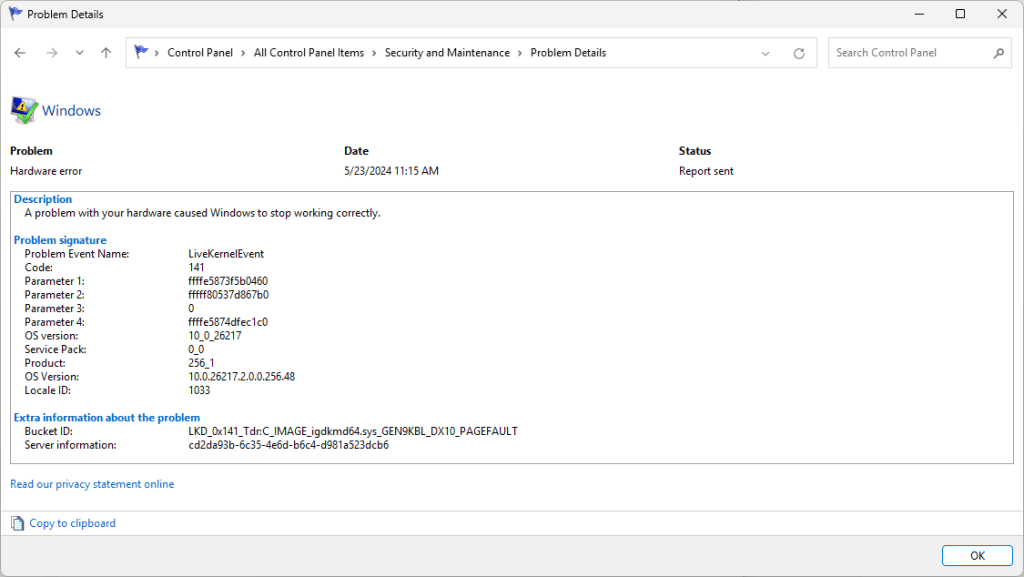

After clicking May 23 in Figure 3, I double-clicked the entry labeled “Windows” with a summary that reads “Hardware error,” which brought up the Problem Details screen shown in Figure 4.

Figure 4: For this somewhat generic “Hardware error,” the most useful info appears in the Bucket ID line. More often, the Problem Event Name and Code fields lead directly to good info.

Ed Tittel / IDG

The bucket ID for the error (shown under “Extra information about the problem” in Figure 4) includes the string igkdmd64.sys. A quick Google search confirms this is the Windows driver for Intel Graphics Kernel Mode (acronym: igkd). Thus, it’s pretty obvious that the built-in Intel UHD Graphics 620 on the Lenovo ThinkPad X380 Yoga’s i7-8650U CPU experienced a hiccup. You can usually find fair-to-good guidance on problem information by visiting answers.microsoft.com and searching on problem event names, bucket IDs, and so forth.
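Spotting a driver or executable name in a bucket ID is simple string work, and easy to script when you’re triaging many reports. A minimal Python sketch, assuming only that module names appear as tokens ending in .sys, .dll, or .exe, separated by characters such as underscores, colons, or exclamation points; the function name and the sample bucket ID below are hypothetical, not taken from Figure 4.

```python
import re

# A module name: letters, digits, dots, or hyphens, ending in a common binary
# extension that is not followed by another letter or digit. Underscores act
# as separators, so a name that itself contains "_" is cut at the last "_".
MODULE_RE = re.compile(r"[A-Za-z0-9.\-]+?\.(?:sys|dll|exe)(?![A-Za-z0-9])", re.IGNORECASE)


def modules_in_bucket(bucket_id: str) -> list[str]:
    """Return module names found in a bucket ID, lowercased, in order, without duplicates."""
    found: list[str] = []
    for match in MODULE_RE.findall(bucket_id):
        name = match.lower()
        if name not in found:
            found.append(name)
    return found
```

For instance, modules_in_bucket("LKD_0x141_Tdr:6_IMAGE_examplegfx.sys_3D") returns ['examplegfx.sys'], which is the name you would then search for.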

What kinds of problems can ReliMon diagnose?

Knowing the source of failures can help you take action to prevent them. For example, certain critical events show APPCRASH as the Problem Event Name. This signals that some Windows app or application failed badly enough to terminate. Such failures are typically internal to the app and often require a fix from its developer. Thus, if I see a Microsoft Store app that I seldom or never use throwing crashes, I’ll uninstall that app so it won’t crash anymore. This keeps the stability index up at no functional cost (since I don’t use the app anyway).
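If you export reliability records, tallying APPCRASH reports per application makes the rarely used but frequently crashing app easy to spot. A minimal sketch, assuming records from the Win32_ReliabilityRecords WMI class have been loaded as a list of dicts with ProductName and Message keys, and assuming the message text of a crash report contains the literal problem event name APPCRASH (worth verifying against your own exports); crash_counts() is a hypothetical helper.

```python
from collections import Counter


def crash_counts(records: list[dict], event_name: str = "APPCRASH") -> list[tuple[str, int]]:
    """Count records whose message mentions event_name, per ProductName, most frequent first."""
    counts = Counter(
        rec.get("ProductName") or "(unknown)"
        for rec in records
        # Message may be missing or null in exported JSON; treat that as no match.
        if event_name in (rec.get("Message") or "")
    )
    return counts.most_common()
```

An app you never use that sits near the top of this list is a candidate for uninstalling.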

The same approach works for update checkers of many kinds. (I prefer to update manually, or to use an update tool such as Microsoft’s WinGet package manager in PowerShell or at a Command Prompt.) As it turns out, Reliability Monitor is a great tool for catching updaters that you may have tried, but failed, to block through the Startup tab in Task Manager. I’ve used it to detect updaters for the Intel Driver & Support Assistant, CCleaner, MiniTool Partition Wizard, anti-malware packages, Office plug-ins, Java, and lots of other stuff. In many such cases, I removed them (by uninstalling them or renaming their .exe files) because (a) I didn’t need or use them and (b) I wanted to eliminate a source of Windows errors.

Recently, I also found myself facing a “black screen with cursor” on a Lenovo ThinkPad P16. By using two keyboard sequences (Win key + Ctrl + Shift + B to restart the graphics driver, then Ctrl + Alt + Del to access the Windows “master control menu”), I regained control of the PC. A quick trip into Reliability Monitor showed me an error with this telltale string in its bucket ID information: “CreateBlackScreenLiveDump.” That string clearly indicates that something went wrong with the graphics driver, as did the system’s recovery after I entered the “reset graphics” key combo. I ended up reverting to the previous NVIDIA graphics driver to fix the problem.

Where ReliMon is less helpful

Sometimes, you’ll find that error sources are applications you need or want to run, or OS components and executables. Uninstalling such things is not an option: doing so may be unproductive or even render the OS inoperable. When that kind of thing pops up (and it often does), all you can do is report the issue via the Microsoft Feedback Hub, attach the Reliability Monitor details, and hope that Microsoft fixes whatever’s broken sooner rather than later.

Here are some examples from across my dozen or so Windows 11 PCs and VMs over the last 30 days (all show “Stopped working” or “Stopped responding and was closed” in ReliMon’s summary field):

- AppX Deployment Service

- Intel System Usage Report

- Microsoft Phone Link

- Microsoft Teams Updater

- Windows Biometric Service

- Windows Camera Frame Server

- Windows Explorer

Nearly every item (except the one labeled “Intel…”) is an OS element that is required to keep Windows working. Thus, getting rid of them is not an option. Reporting them via Feedback Hub is the only responsible thing to do.
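Compiling a list like this across several machines is easier with a script. A minimal sketch, assuming each exported reliability record is a dict with a ProductName and a TimeGenerated string in the sortable yyyy-MM-ddTHH:mm:ss form; recent_sources() is hypothetical, and its 30-day default simply mirrors the window used above. It does not filter by failure type, so you would still pick out the “Stopped working” entries yourself.

```python
from datetime import datetime, timedelta


def recent_sources(records: list[dict], now: datetime, days: int = 30) -> list[str]:
    """Return distinct ProductName values logged within `days` days before `now`."""
    cutoff = now - timedelta(days=days)
    names = {
        rec["ProductName"]
        for rec in records
        # Skip records without a product name; parse the sortable timestamp.
        if rec.get("ProductName")
        and datetime.fromisoformat(rec["TimeGenerated"]) >= cutoff
    }
    # Case-insensitive alphabetical order reads like the list above.
    return sorted(names, key=str.lower)
```

Running it over each machine’s export and merging the results gives a fleet-wide view like the one above.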

Add ReliMon to your troubleshooting toolkit

I hope I’ve shown that Reliability Monitor can be a useful and informative member of any Windows professional’s troubleshooting toolkit, for both Windows 10 and Windows 11. I check it at least monthly on the machines I manage, even when nobody’s complaining about something odd, slow, or broken. And when such complaints do come in, it’s one of the first tools I use to figure out and fix what’s causing trouble. I recommend you do likewise.

This article was originally published in October 2020 and most recently updated in June 2024.