Independent experts have urged businesses to think carefully before relying on third-party support for security patches once Windows 10 reaches its end of life in October 2025.

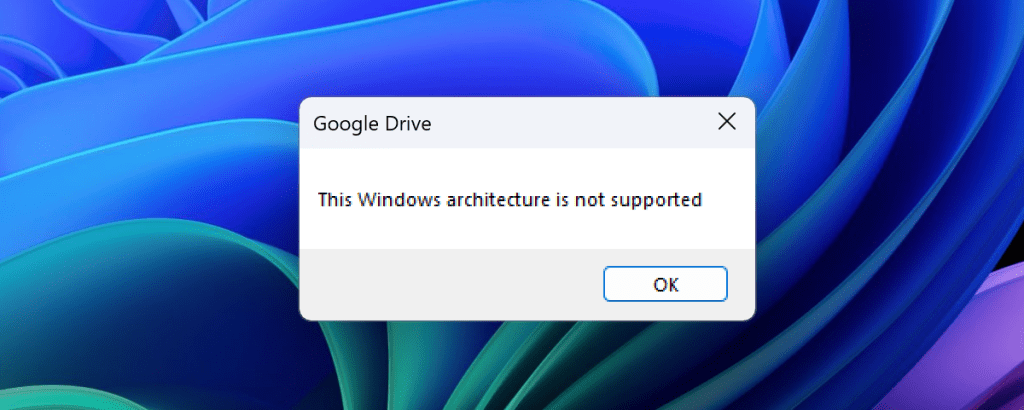

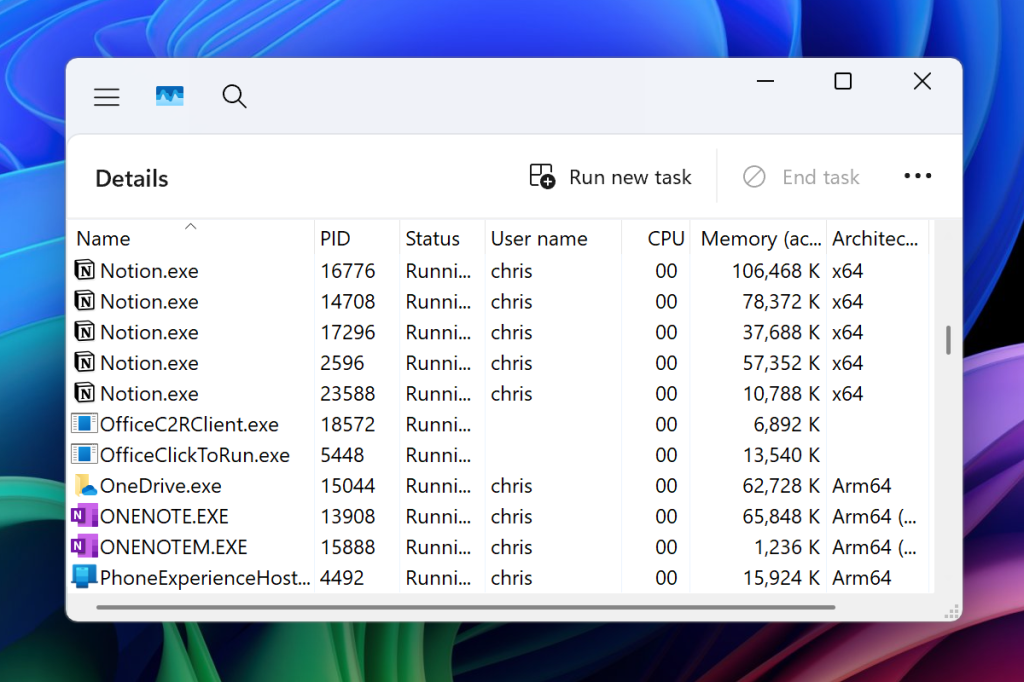

Upgrading from Windows 10 may be challenging for some businesses because many older PCs may not meet the minimum system requirements for Windows 11. Some software or applications may not be compatible with Windows 11, forcing users to stick with Windows 10 or find alternatives.

In addition, point-of-sale (POS) terminals running Windows 10 may be difficult to upgrade, presenting a particular challenge for IT professionals in the retail and hospitality sectors.

As with the retirement of previous versions of Windows, Microsoft is offering enterprises extended support for Windows 10. For commercial customers and small businesses this comes in at $61 per device in the first year, doubling to $122 per Windows 10 device in year two and $244 per device for the third and final year.

Organisations using cloud-based update management pay less: $45 per user, covering up to five devices, in the first year.

Educational institutions get a steep discount: extended support costs them a total of $7 over the maximum of three years.

Microsoft’s Extended Security Updates program offers monthly critical and important security updates for Windows 10, but without access to any new features and only for up to three years.

Micro-patching alternative

Acros, a Slovenian company specialising in security updates, announced Wednesday that it will offer enterprise users of Windows 10 extended support under its 0patch brand for up to five years at a lower cost than Microsoft.

For medium and large organizations, 0patch Enterprise includes central management, multiple users and roles, and comes in at €34.95 (around $38) per device per year, excluding tax. A cut-down version pitched at small businesses and individuals, 0patch Pro, costs €24.95 plus tax per device per year.
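To put the quoted prices side by side, the arithmetic can be sketched in a few lines of Python. This is a rough illustration only: it uses the per-device list prices quoted above, takes the article's approximate $38 conversion for 0patch Enterprise, and assumes (the article does not say) that the 0patch price stays flat from year to year. The function names and the 100-device fleet are hypothetical, and tax, currency movements and volume discounts are ignored.

```python
# Per-device list prices quoted in the article, in US dollars.
# Microsoft's commercial ESU price doubles each year for up to three years.
MICROSOFT_ESU_BY_YEAR = [61, 122, 244]

# 0patch Enterprise: EUR 34.95 per device per year, "around $38" per the article.
# Assumption: the price is the same in every year of the five-year offer.
ZERO_PATCH_ENTERPRISE_PER_YEAR = 38
ZERO_PATCH_MAX_YEARS = 5


def microsoft_esu_total(devices: int, years: int) -> int:
    """Cumulative Microsoft ESU cost for a fleet over the first `years` years."""
    if not 1 <= years <= len(MICROSOFT_ESU_BY_YEAR):
        raise ValueError("Microsoft ESU is offered for one to three years")
    return devices * sum(MICROSOFT_ESU_BY_YEAR[:years])


def zero_patch_total(devices: int, years: int) -> int:
    """Cumulative 0patch Enterprise cost (approximate USD, excluding tax)."""
    if not 1 <= years <= ZERO_PATCH_MAX_YEARS:
        raise ValueError("0patch support is offered for one to five years")
    return devices * ZERO_PATCH_ENTERPRISE_PER_YEAR * years


if __name__ == "__main__":
    fleet = 100  # hypothetical fleet size, for illustration only
    for years in (1, 2, 3):
        ms = microsoft_esu_total(fleet, years)
        zp = zero_patch_total(fleet, years)
        print(f"{years} year(s): Microsoft ${ms:,} vs 0patch about ${zp:,}")
```

On these figures, a single device costs $427 to keep on Microsoft's extended support for the full three years, against roughly $114 with 0patch Enterprise over the same period. The comparison covers licence cost only and says nothing about the risk and dependency questions raised by the experts quoted here.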

0patch uses a system of “micro-patches” to address critical vulnerabilities, an approach touted as faster and offering a lower potential for system instability. The vendor has previously offered extended support for Windows 7 and Windows 8.

The company said it may offer fixes for vulnerabilities that Microsoft leaves unpatched while also providing patches for non-Microsoft products (such as Java runtime, Adobe Reader etc.), as explained in a blog post.

Gauging risk to reward

Rich Gibbons, head of market development at IT asset management specialist Synyega, noted that third-party support is an established part of the enterprise software market.

“Businesses regularly bring in third parties to help patch and maintain their legacy Oracle, SAP and IBM estates, and while it’s not as common with Microsoft, it’s still a legitimate option, and one worth assessing,” Gibbons said.

“Purchasing extended support packages from Microsoft is expensive and will only go up in price each year. It’s therefore little wonder that more cost-effective options like those offered by 0patch are beginning to gain traction,” Gibbons added.

He advised companies to conduct a full risk-reward analysis to determine whether the cost savings justify choosing alternatives like 0patch over purchasing extended support from Microsoft or biting the bullet and upgrading their systems.

Leaving Microsoft’s ecosystem

Javvad Malik, lead security awareness advocate at KnowBe4, also urged companies to be careful about opting for third-party support rather than facing the financial and operational burdens of a significant overhaul.

“The viability of turning to a third party for extended support, as opposed to embarking on the arguably Herculean task of retooling apps and refreshing hardware to embrace Windows 11, is, on the surface, an attractive proposition,” Malik told Computerworld. “However, engaging with a third party for security patches introduces a layer of dependency beyond the control of Microsoft’s established ecosystem.”

Malik warned that relying on extended support for an extended period might make it more difficult to upgrade in the future.

“Upgrading from one version to the next is relatively simple when compared with upgrading two or more versions up from the current version of any software. So, the cost of delaying an upgrade needs to be evaluated in totality, and not just as a comparison to an upgrade today,” Malik advised.

In response to this criticism, 0patch co-founder Mitja Kolsek told Computerworld that deferring a costly Windows upgrade can be beneficial whilst admitting that enterprises have to move on eventually.

“While an upgrade may eventually be inevitable for functional and compatibility reasons, we’re making sure that you’re not forced to upgrade because of security flaws that the vendor won’t fix anymore,” Kolsek explained. “At the same time, five years is a long time and a lot can happen — maybe you’ll be able to skip a version, or start using some other tool altogether.”