Pixel phones are filled with efficiency-enhancing Google intelligence, and one area that’s all too easy to overlook is the way the devices can improve the act of actually talking on your cellular telephone. That’s true for any Pixel model, no matter how old it may be, and it’s especially true for the new Pixel 9 series of phones that launched this past week.

Talking on your phone, you say? What is this, 1987?! Believe me, I get it, Vanilli. We’re all perpetually busy beings these days, and the timeless art of speaking to another human on your mobile device can seem both archaic and annoying.

But hear me out: Here in the real world, placing or accepting (or maybe even just avoiding) a good old-fashioned phone call is occasionally inescapable. That’s especially true in the world of business, but it’s also apparent in other areas of life — from dialing up a restaurant to confirm your carnitas are ready to dodging your Uncle Ned’s quarterly regards-sending check-ins. (No offense, Ned. I never dodge your calls. Really. Send my regards to Aunt Agnes.)

Whatever the case may be, your trusty Pixel can make the process of dealing with a call easier, more efficient, and infinitely less irksome, and you don’t need the shiny new Pixel 9 to appreciate any of these advantages. (The only exception is the upcoming Call Notes feature launching exclusively on the Pixel 9, but since it isn’t available to anyone just yet, we won’t get into it here.)

As a special supplement to my Pixel Academy e-course — a totally free seven-day email adventure that helps you uncover tons of next-level Pixel treasures — I’d like to share some of my favorite hidden Pixel calling possibilities with you, my fellow Pixel-appreciating platypus. Check ’em out, try ’em out, and then come sign up for Pixel Academy for even more super-practical Pixel awesomeness.

Pixel calling trick #1: The call sound sharpener

We’ll start with something critical to the act of communicating via voice from your favorite Pixel device — ’cause if you’re dialing digits and preparing to (gasp!) speak to another sentient creature, the last thing you want to do is struggle to hear the person clearly.

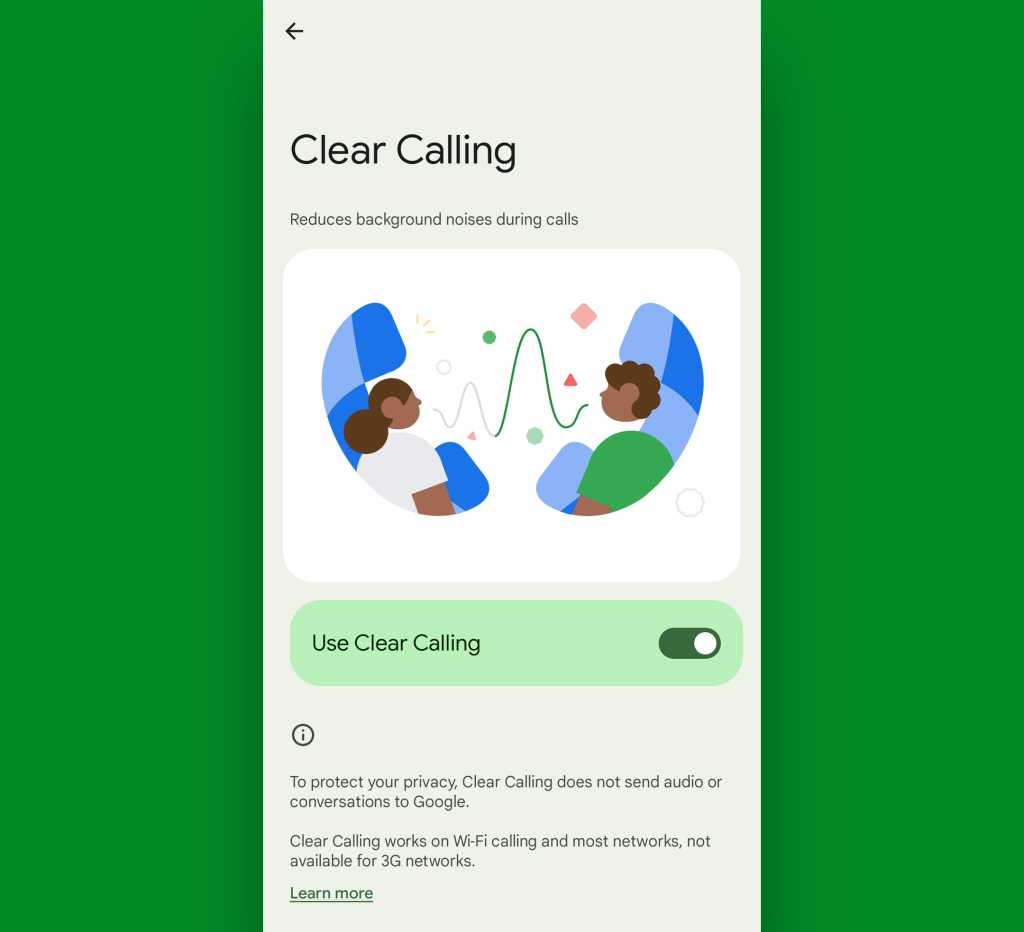

Since 2022’s Pixel 7 series, all Pixels have offered a helpful option called Clear Calling that you’d be crazy not to enable. In short, it’s like noise cancellation for the human voice: It actively reduces the background noise when the person you’re talking to is in a loud environment (as people on cell phones always seem to be). And it genuinely does make a meaningful, noticeable difference.

Best of all? All you’ve gotta do is dig around a bit once to make sure the system is enabled:

- First, open up your Pixel’s system settings.

- Tap “Sound & vibration,” then scroll down and look for the line that says “Clear Calling.” Again, it should be present on any Pixel model from the Pixel 7 onward.

- Tap that, then confirm that the toggle on the screen that comes up next is in the on and active position.

JR Raphael, IDG

And that’s it! All your compatible calls will now have Clear Calling enhancements in place, and you’ll be able to hear what any comrades, colleagues, and/or caribous you communicate with are saying more easily than ever.

Not bad, right? And we’re just getting started.

Pixel calling trick #2: The hassle-free holder

The worst part of phone calls, without a doubt, is having to hold. It’s the time-tested way for some of the world’s most sadistic companies to waste precious moments in your day, test what little patience you have left, and summon the pink-speckled rage-demon that lives deep within your brain.

Enter one of the Pixel’s best-kept secrets: With your Google-made gadget in hand (or fin — no offense intended to any aquatic readers out there), you’ll never have to hold again.

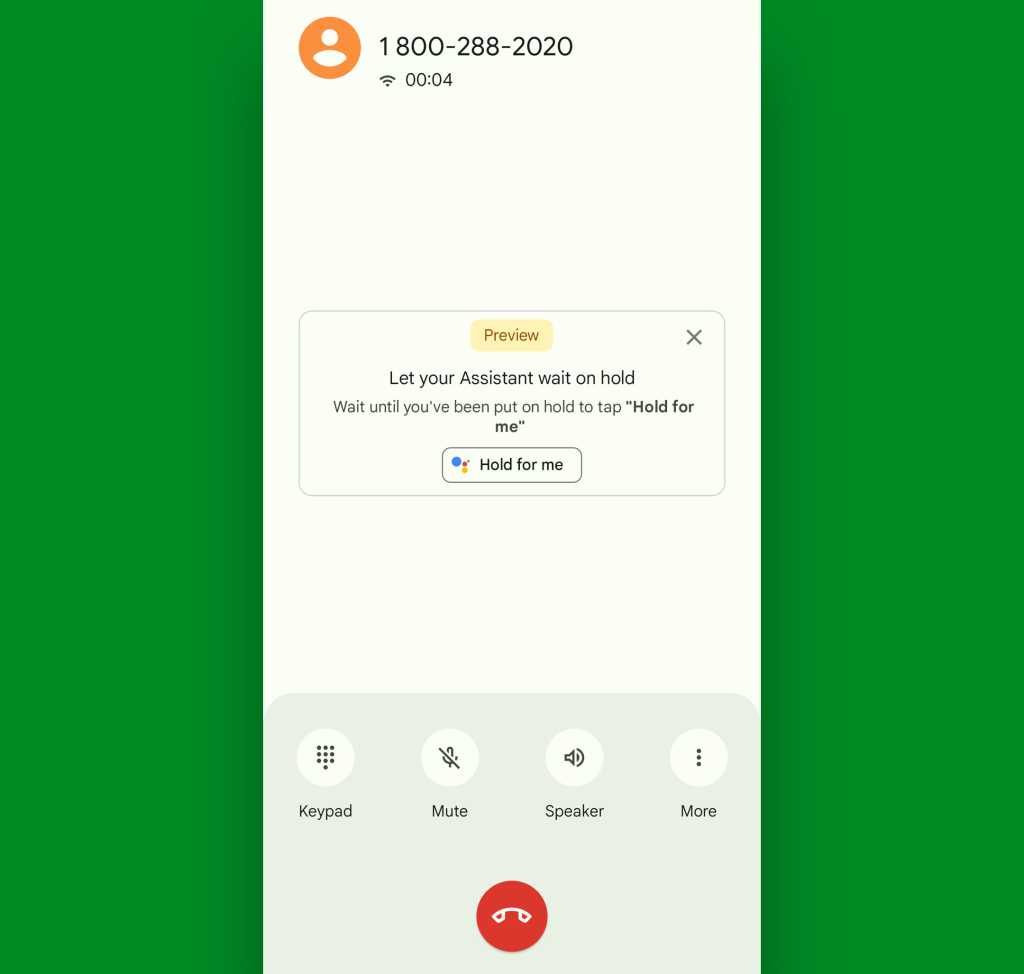

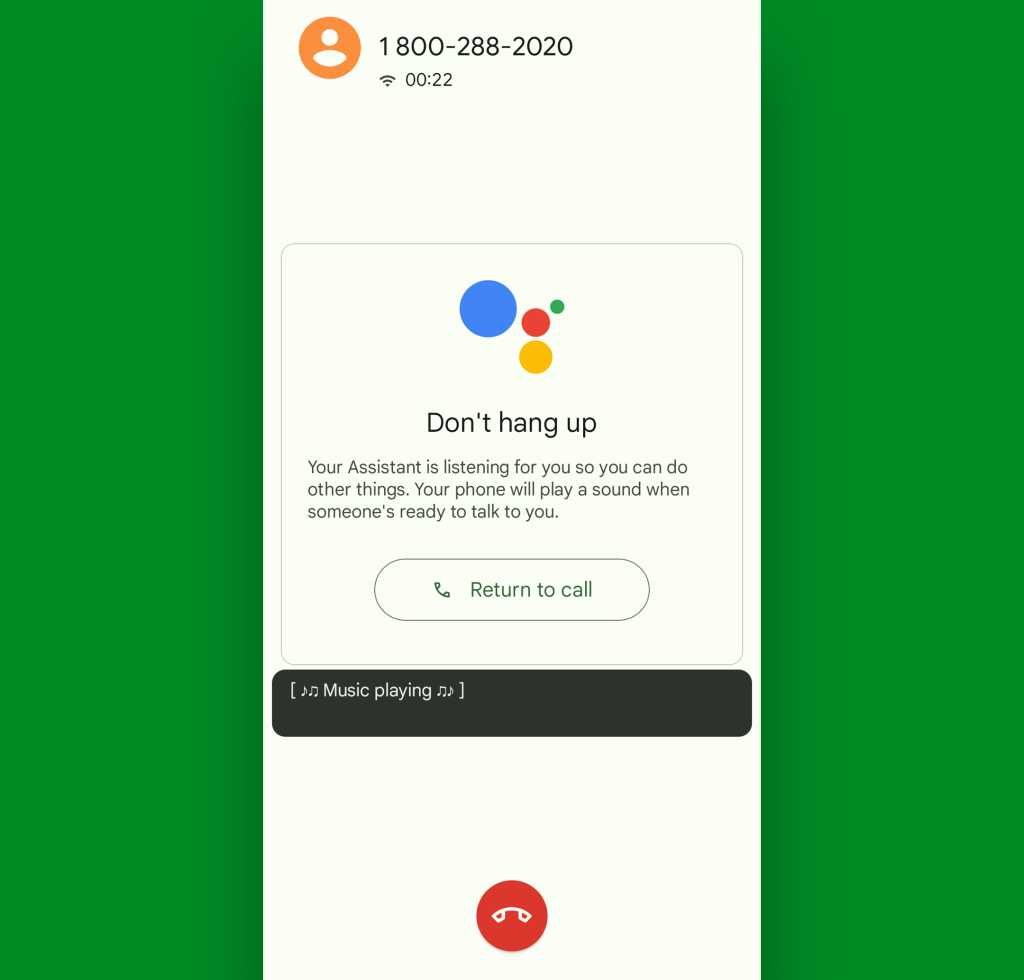

Open up the Phone app, tap the three-dot menu icon in its upper-right corner, and select “Settings” from the menu that pops up. Look for the section labeled “Hold for Me.” It’s present on the Pixel 3 and higher, and it’s currently available in the US, the UK, Canada, Australia, and Japan (sorry, other international pals!). It works mostly for English-speaking Pixel owners, though it also supports Japanese in Japan.

If you meet those conditions, tap that line, then make sure the feature is actually active by flipping the toggle into the on position on the screen that comes up. It’s typically off by default on most new Pixels, so you’ll need to take this step anytime you reset your phone or move to a new device.

Then just call any crappy company you like (or don’t like, to be more accurate) and look for the handy new Hold for Me option on your screen once the call is underway and your brightly colored rage beast starts polka-dancing around your cranium. As long as the call involves a toll-free number, your fancy new sanity-saving button should show up and be ready for vigorous pressing.

The second the company places you on an eternal hold, smash that button, utter a few choice curse words for good measure, and then go about your business without having to listen to the sounds of smooth jazz and endless reassurances that your call is, like, totally important to them (and will be answered — let’s all say it together now — in the order it was received).

As soon as an actual (alleged) human comes on the line, your Pixel will alert you. At that point, unfortunately, the displeasure of dealing with said company is back on your shoulders. But being able to skip the 42-minute build-up to that point is a pretty powerful perk and one you won’t find on any other type of device.

Pixel calling trick #3: The irritation estimation station

Having your Pixel hold for you is fantastic and all, but what if you could avoid putting yourself in a position where you even have to hold in the first place?

Well, hold the phone, my friend — ’cause your Pixel can help with that, too.

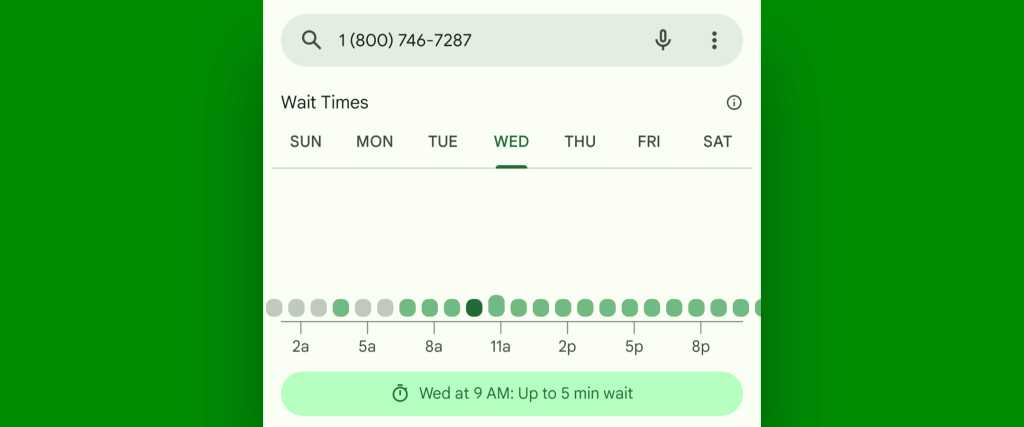

In what may be the most hidden of all hidden Pixel calling perks, the Pixel Phone app has the incredibly cool ability to tell you how busy any given business number is likely to be before you even place the call.

This one happens automatically, whenever relevant info is available, so you mostly just need to know it exists and then keep an eye out for it when the right situation arises.

The way it works is this: When you open up your Pixel’s Phone app and start typing out a number in the dialer, your Pixel will automatically match those digits against its knowledge of that business’s typical call activity at different times of day and, from that, estimate how long you’re likely to wait depending on when you call. As soon as you finish typing in the number, that info will appear on your screen if it’s available — like so:

Now, that’s some intel I will happily accept!

What’s especially useful about this setup is that in addition to seeing the expected wait time for your call in the current moment, you can tap any other day or time to compare and see if things might be at least a little less bad at some point in the future.

Yes, please — and thank you.

Pixel calling trick #4: The menu maze skipper

Holding aside, one of the most irritating parts of calling a company is navigating your way through those blasted phone tree menus. You know the drill, right?

- For store hours and information, press 1.

- For directions to the nearest location, press 2.

- For a test of your sanity, continue to listen to these choices.

- For the sound of sea cucumbers, press 4.

- For the option you actually want to select, prepare to wait through at least 14 more annoying options.

Yeaaaaaaaaah. Not a great use of anyone’s time (though, to be fair, a great way to test your ability to get angry).

Well, my Pixel-palmin’ pal, your Googley gadget’s got your back. But it’s on you to enable the associated feature and make sure it’s ready to save you from your next visit to the haunted phone tree forest.

Here’s how:

- Open up that fancy Phone app of yours.

- Once again, tap the three-dot menu icon and select “Settings.”

- Look for the “Direct My Call” option and smack it with your favorite fingie.

- Flip all the toggles on the screen that comes up into the on position. (Again, they’re usually off by default with any new or freshly reset Pixel phone.)

- Flip a pancake on a griddle and then eat it with gusto.*

* Gusto-filled pancake consumption is optional but highly recommended.

This one’s available on the Pixel 3a and higher, and only in English in the US and UK, by the way. Insert the requisite grumbling on behalf of all other Pixel owners here.

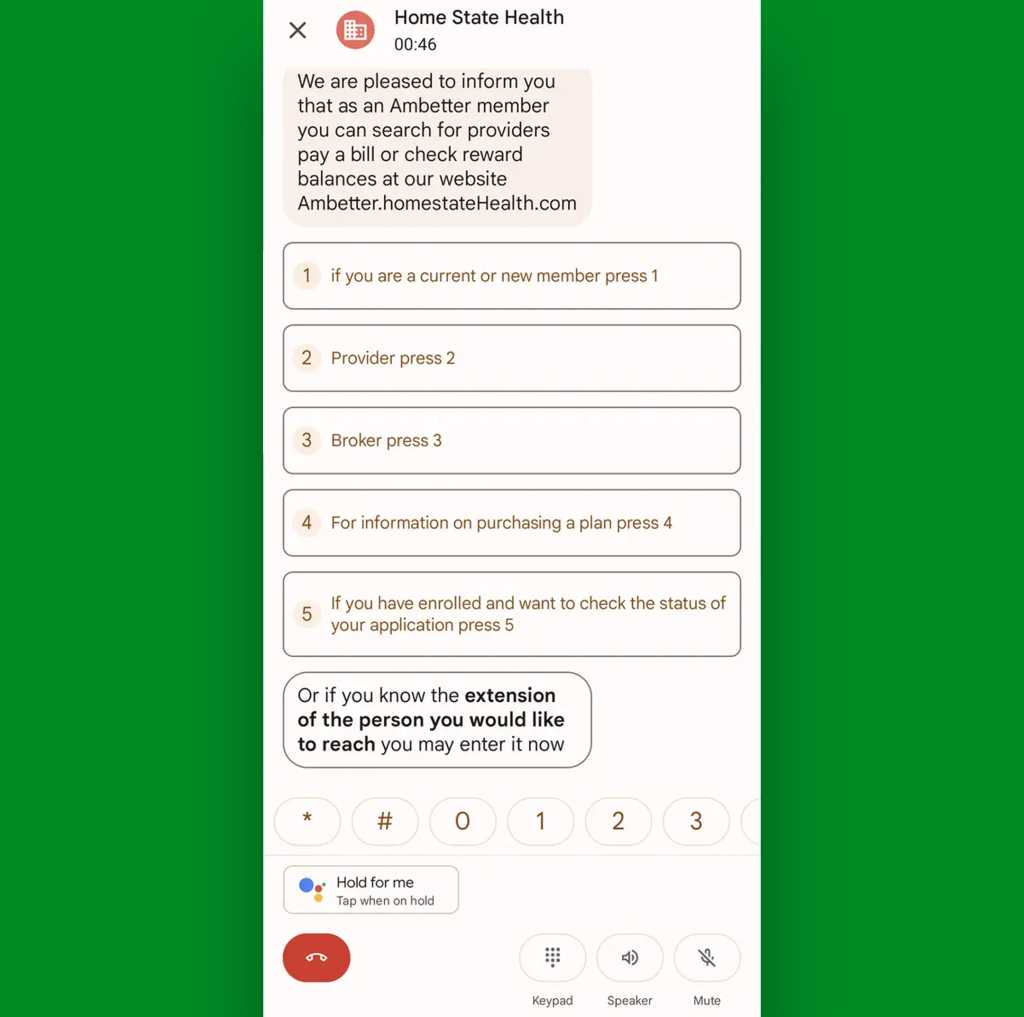

So long as you can get the option enabled, though, just look for the Direct My Call button at the top of the screen after you’ve made a call to a company that clearly hates you. Tap it, and as soon as your Pixel detects number-based menu options being provided, it’ll kick the feature in and start showing you any available options — set apart in buttons alongside a transcription of everything else the obnoxious phone system is saying to you:

Still mildly annoying? Of course. But a noticeable step up from actually having to tune in and listen to all that gobbledegook in real time? Holy customer service nightmare, Batman — is it ever.

Pixel calling trick #5: The complete call transcriber

Our next buried Pixel calling treasure is technically an Android accessibility feature. But while it provides some pretty obvious (and pretty incredible) benefits for folks who actually have hearing issues, it can also be useful for just about anyone in the right sort of situation.

It’s part of the Pixel’s Live Caption system, which offers a couple of interesting ways to make your life a little easier. The system itself is available in English on the Pixel 2 and later, and in English, French, German, Italian, Japanese, and Spanish on the Pixel 6 series and later (tan elegante!).

To use it, just press either of your phone’s physical volume buttons while you’re in the midst of a call — then look for the little rectangle-with-a-line-through-it icon at the bottom of the volume control panel that pops up on your screen.

Tap that icon, confirm that you want to turn on the Live Caption for calls system, and then just wait for the magic to begin.

How ’bout them apples, eh? Everything the other person says will be transcribed into text and put on your screen in real time throughout the call.

If you’ve got the Pixel 6 or higher, you can even have your phone translate the jibber-jabber into a different language on the fly. Those more recent Pixel models also let you type out responses and then have the affable genie within your gadget read your words aloud to the other person on your behalf — a fine way to have an actual spoken conversation without making a single peep, for times when you need to stay silent but the other person still needs to hear you.

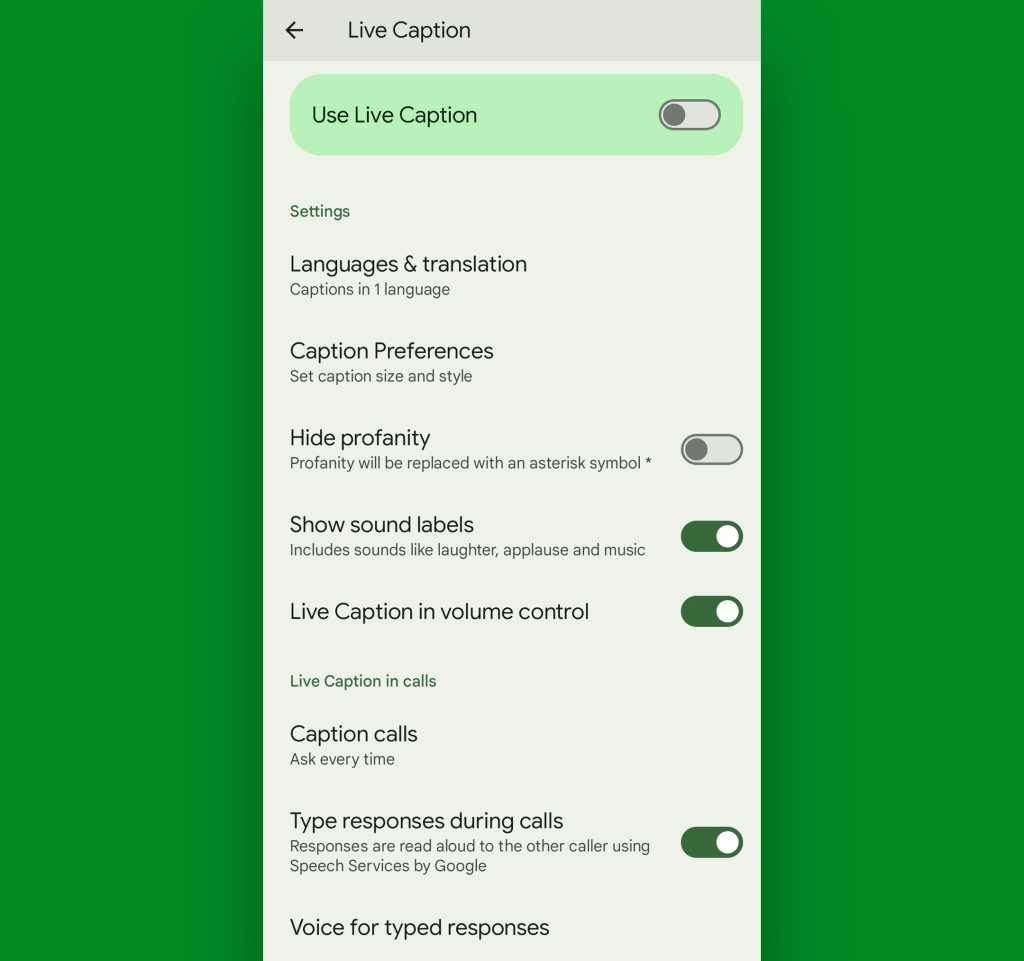

To activate that option and also configure the other Live Caption possibilities, head into the Sound & Vibration section of your system settings and tap the “Live Caption” line. There, you can enable the ability to “Type responses during calls” and also tell your Pixel to caption your calls always or never, or to ask you each time.

Just be sure to hit your volume button again once the call is done and turn the Live Caption system back off via that same volume-panel icon. Otherwise, the system will stay on and continue to caption stuff indefinitely (and also consume needless battery power while it’s doing it).

Pixel calling trick #6: The automated screener

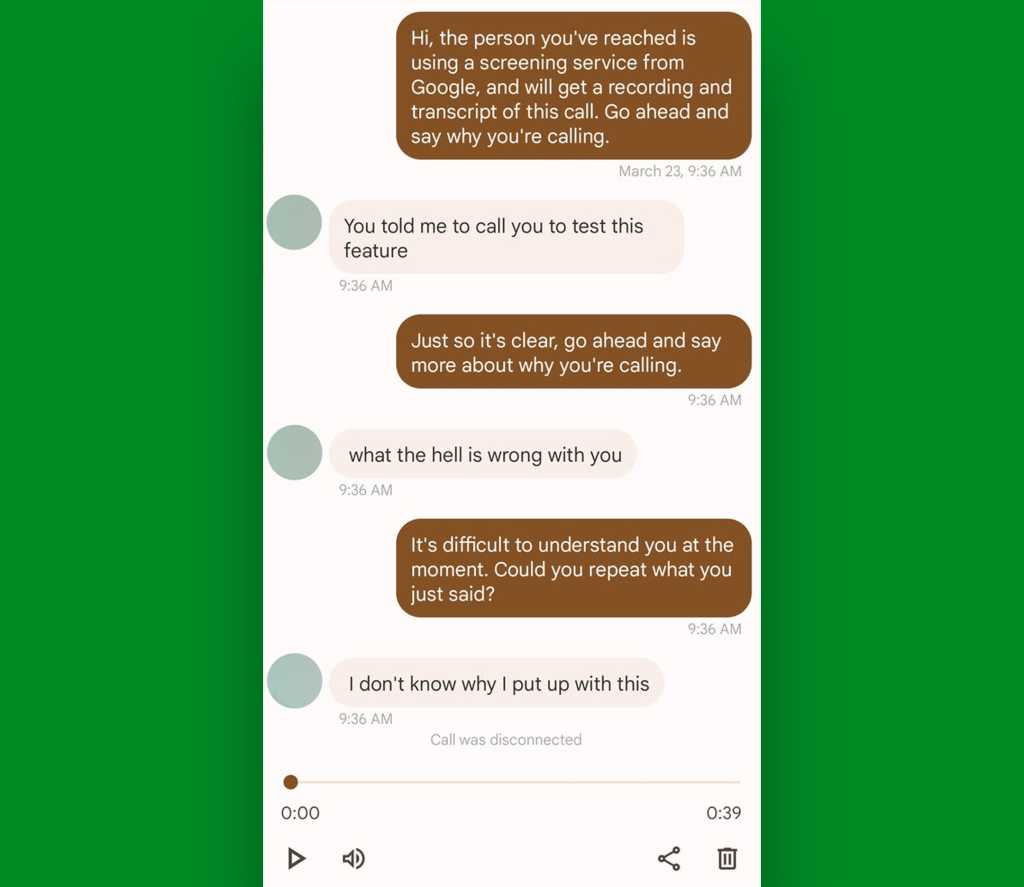

Perhaps my favorite tucked-away Pixel phone feature is one that brings us into the realm of incoming calls. It’s an extraordinarily sanity-saving system for screening calls as they come in to keep you from having to fritter away moments of your day talking to telemarketers, totally theoretical relatives named Ned, and anyone else you’d rather avoid.

This one’s available on all Pixel models, but only in English and only in the US, as of now. The feature sort of works in a bunch of other countries, too, though without the automated element.

To set it up, head back into your Pixel’s Phone app and once again tap that three-dot menu icon followed by “Settings.” This time, find and select the line labeled “Call Screen.”

It may take a few minutes for your phone to activate the feature the first time you open it, but once it does, you can set up exactly how the system works and when it should kick in. The latest version of Google’s Pixel Call Screen gives you three simple paths to choose from, depending on how aggressively you want your Pixel to screen and filter calls for you:

- Maximum protection automatically kicks all unknown numbers (i.e., anyone who isn’t in your Google Contacts on Android) into the screening process and auto-declines any calls determined to be spam on your behalf

- Medium protection takes things down a notch and screens only suspicious-seeming calls, while continuing to auto-decline spam

- And Basic protection declines only known spam calls, without all the extra screening

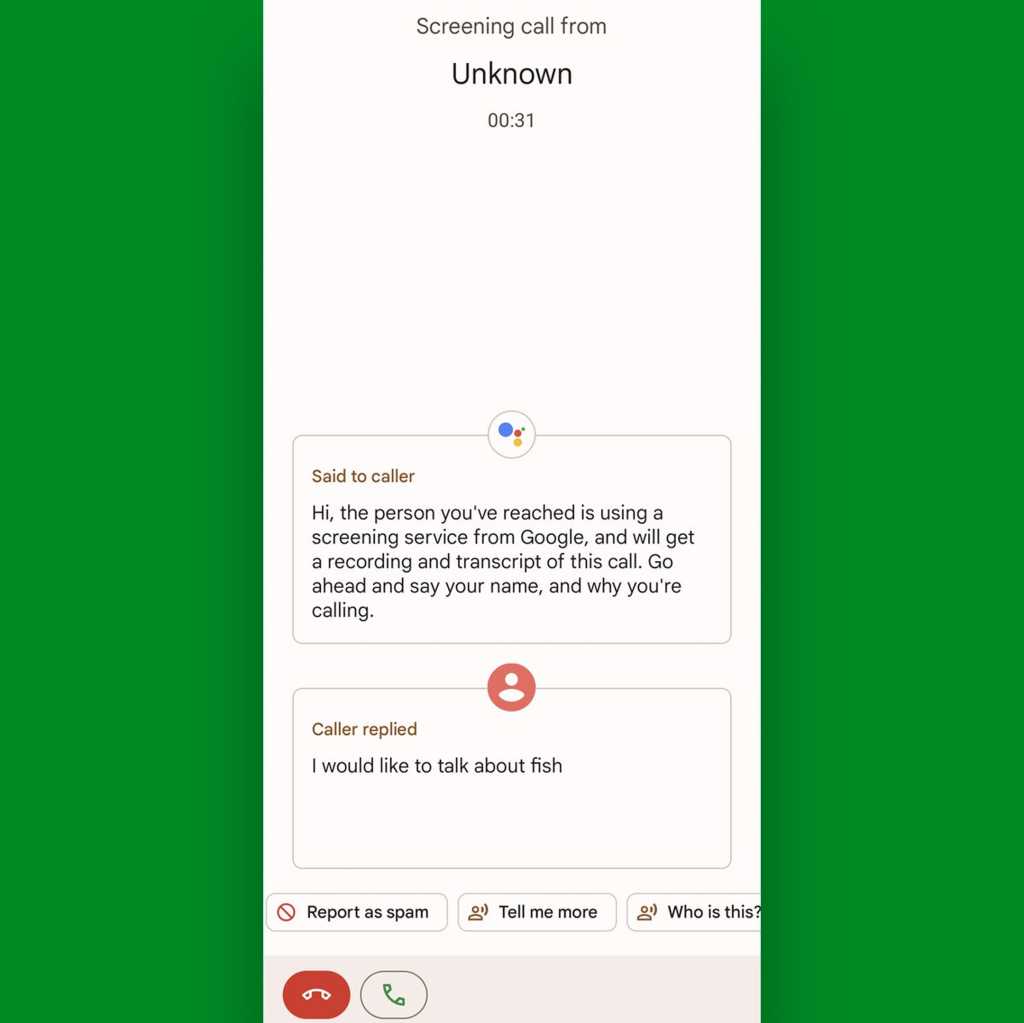

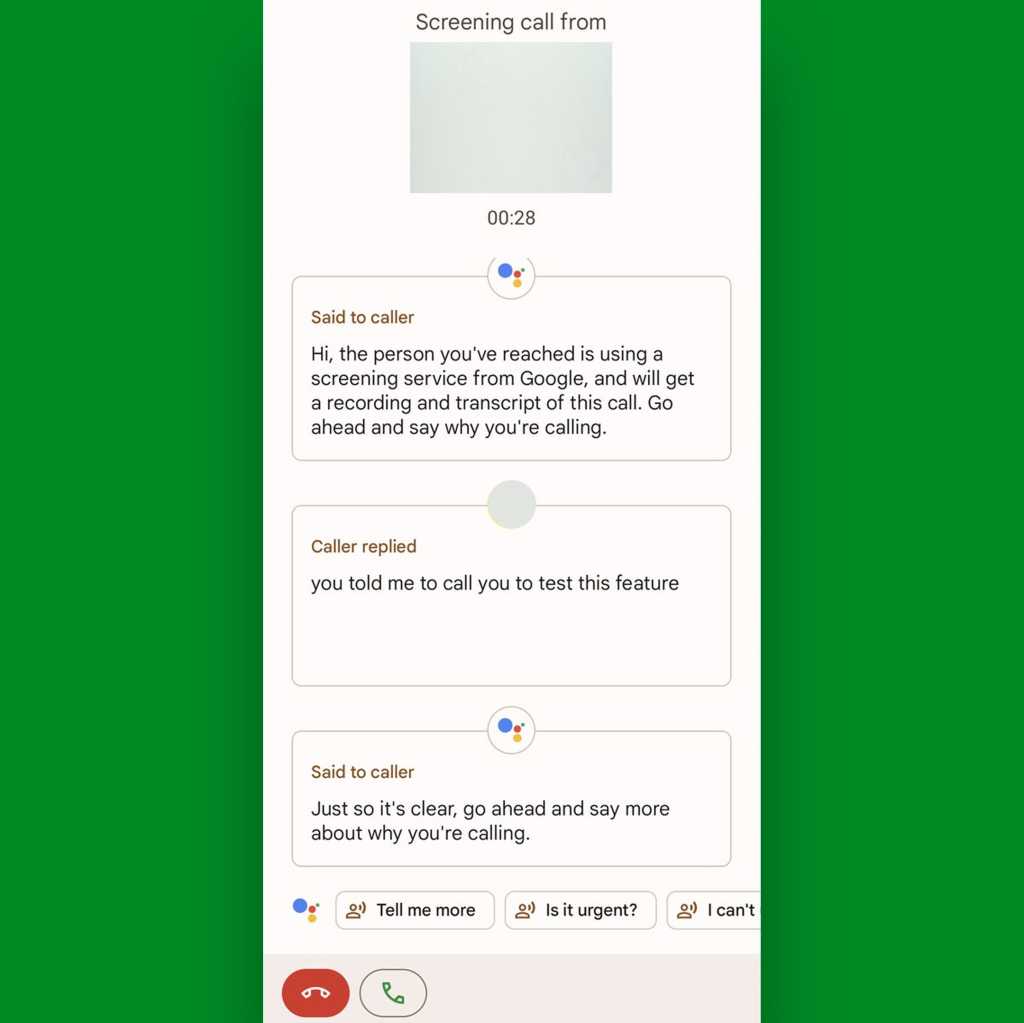

Pick whichever path you prefer, and the next time a person and/or evil spirit on the other end of the line gets sent into screening, your phone will ring while showing a transcription of their response on the screen. That way, you can see what the call’s about before deciding if you want to pick up.

And here’s the especially cool part: Once the system is set up and activated, you can always trigger it manually, too — even when a call is coming in from a known number. Just look for the “Screen call” command on the incoming call screen. Whoever’s calling will be asked what they want, and you’ll see their responses transcribed in real time. You can then opt to accept or reject the call or even select one-tap follow-up questions to get more info (and/or annoy your co-workers, friends, and family — an equally valid use for the function, if you ask me).

You can always find a full transcription and audio recording of those interactions in the Recents tab of your Phone app, too, in case you ever want to go back and review (and perhaps publish) ’em later (hi, honey!).

Ah…efficiency.

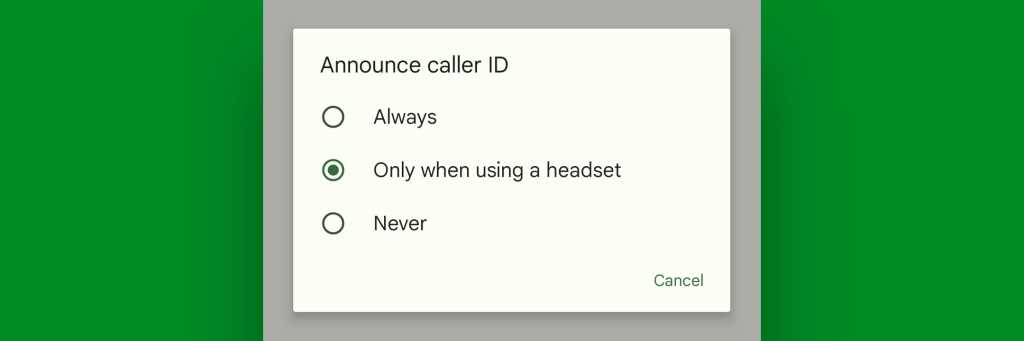

Pixel calling trick #7: The out-loud call announcer

Provided you work in a place where occasional noises aren’t a problem, another interesting way to stay on top of incoming calls is to tell your Pixel phone to read caller ID info out loud to you anytime a call comes in. That way, you can know who’s calling as soon as you hear the ringtone, without even having to find your phone or glance up from your midafternoon Wordle break (ahem, Very Important Work Business™).

This one’s super-simple to set up: Just gallop your way over into the Phone app, tap that three-dot menu icon and select “Settings,” then look for the “Caller ID announcement” line way down at the bottom of the screen.

Tap it, and you’ll be able to choose to have incoming call announcements made always, never, or with the lovely middle-ground option of only when you’ve got a headset connected.

And here’s an extra little bonus to go along with that: If you’ve got a Pixel 6 or higher and use English, Japanese, or German as your system language, you can even accept or reject a call solely by speaking a command out loud.

You only have to configure it once: Provided you have one of those devices, head into your Pixel’s system settings and type “quick phrases” into the search box at the top of the screen.

Tap “Quick phrases” in the list of results, then turn the toggle next to “Incoming calls” into the on position — and the next time a call starts a-ring-a-ring-ringin’, simply say “Answer” or “Decline” to have your Pixel do your bidding without ever lifting a single sticky finger.

Pixel calling trick #8: The polite rejecter

All right, so what if you get a call you know you want to avoid — but in an extra-polite way that prevents you from having to respond to a voicemail later? Well, fear not, for your Pixel has a fantastic feature for that very noble purpose.

The next time such a call comes in, look for the “Message” button on the incoming call screen, right next to that “Screen call” command we were just going over a minute ago. (And if your Pixel’s screen was on when the call started and you’re seeing the small incoming call panel instead of the full-screen interface, press your phone’s power button once. That’ll bump you back out to the standard full-screen setup, where you’ll see the button you need.)

Tap that command, and how ’bout that? With one more tap, you’ll be able to decline the call while simultaneously sending the person a charming message explaining the reason for your rejection.

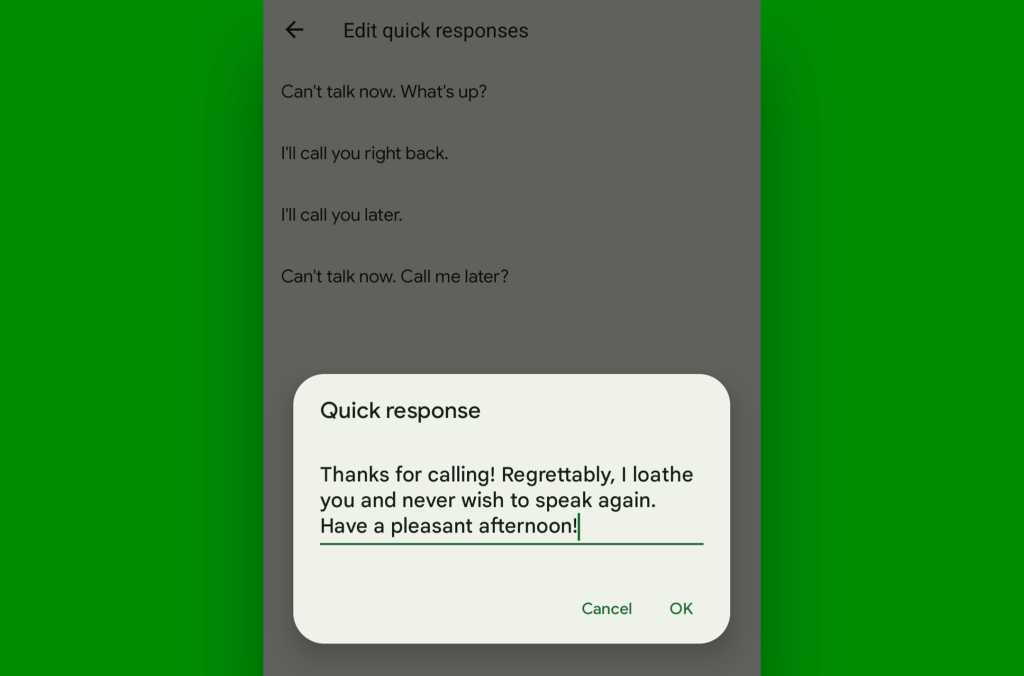

You can pick from a handful of prewritten texts or even opt to write your own message on the spot, if you’re feeling loquacious. You can also customize the default responses to make ’em more personalized and appropriate for your own friendly rejection needs. Just go back into the “Settings” area of your Pixel Phone app and look in the “Quick responses” section there to get started.

Cordial, no? And speaking of simple dismissals, we’ve got one more important Pixel calling trick to consider…

Pixel calling trick #9: The simple silencer

Let’s be honest: No matter what type of pleasant-seeming ringtone you pick out on your phone — the gentle tolling of chimes, the majestic fwap! of an octopus violently flapping its limbs, or maybe even the soothing bellows of that weird guy who for some reason shouted in every single song by the B-52’s — something about the sound of a phone ringing always manages to raise one’s hackles.

Well, three things: First, on the Pixel 2 or higher, you can configure your phone to vibrate only when calls first come in — and then to slowly bring in the ring sound and increase its volume as the seconds move on. That way, the actual ringing remains as minimally annoying as possible and only begins (and also only gets loud) when it’s actually needed. To activate that option, look in the Sound & Vibration section of your system settings, tap either “Vibrate for calls” or “Vibration & haptics,” then make sure the toggle next to “Vibrate first then ring gradually” is in the on and active position.

Second, more recent Pixel models have a nifty “Adaptive alert vibration” feature that’ll intelligently turn down your device’s vibration strength anytime your phone is sitting face-up on a surface. That’s a nice way to make that buzzing a bit less bothersome when a call comes in under those circumstances.

And finally, a super-simple possibility to remember: The next time your Pixel rings and you want to make it stop — whether you’re planning to answer the call or not — just press either of the phone’s physical volume buttons. It’s easy to do even with the phone in your pocket, and the second you do it, all sounds and vibrations will end. You’ll still be able to answer the call, ignore it, send a rejection message, scream obscenities at the caller with the knowledge that they’ll never hear you, or whatever feels right in that moment. But the hackle-raising sound will be silenced, and your sanity will be saved. Whew.

Remember: There’s lots more where this came from. Come join my completely free Pixel Academy e-course for seven full days of delightful Pixel knowledge — starting with some camera-centric smarts and moving from there to advanced image magic, next-level nuisance reducers, and oodles of other opportunities for advanced Pixel intelligence.

I’ll be waiting.