AlphaSense is a market intelligence platform that uses generative artificial intelligence (genAI) and natural language processing to help organizations find and analyze insights from sources like financial reports, news, earnings calls, and proprietary documents.

The purpose behind the platform is to allow business professionals to access relevant insights and make data-driven decisions.

Sarah Hoffman, director of AI research at AlphaSense, is an IT strategist and futurist. Formerly vice president of AI and Machine Learning Research at Fidelity Investments, Hoffman spoke with Computerworld about how AI will change the future of work and how companies should approach rolling out the fast-moving technology over the next several years.

In particular, she talked about how the arrival of genAI tools in business will allow workers to move away from repetitive jobs and into more creative endeavors — as long as they learn how to use the new tools and even collaborate with them. What will emerge is a “symbiotic” relationship with an increasingly “proactive” technology that will require employees to constantly learn new skills and adapt.

How will AI shape the future of work, in terms of both innovation and new workforce dynamics? “AI can manage repetitive tasks, or even difficult tasks that are specific in nature, while humans can focus on innovative and strategic initiatives that drive revenue growth and improve overall business performance. AI is also much quicker than humans could possibly be, is available 24/7, and can be scaled to handle increasing workloads.

“As AI automates more processes, the role of workers will shift. Jobs focused on repetitive tasks may decline, but new roles will emerge, requiring employees to focus on overseeing AI systems, handling exceptions, and performing creative or strategic functions that AI cannot easily replicate.

“The future workforce will likely collaborate more closely with AI tools. For example, marketers are already using AI to create more personalized content, and coders are leveraging AI-powered code copilots. The workforce will need to adapt to working alongside AI, figuring out how to make the most of human strengths and AI’s capabilities.

“AI can also be a brainstorming partner for professionals, enhancing creativity by generating new ideas and providing insights from vast datasets. Human roles will increasingly focus on strategic thinking, decision-making, and emotional intelligence. AI will act as a tool to enhance human capabilities rather than replace them, leading to a more symbiotic relationship between workers and technology. This transformation will require continuous upskilling and a rethinking of how work is organized and executed.”

Why is Gen Z’s adoption of AI a signal for broader trends in business technology? “Gen Z, having grown up in a highly digital environment, is naturally more comfortable with technologies like AI. Their rapid adoption of AI tools highlights a shift towards technology-first thinking. As this generation excels in the workforce, their familiarity with AI will drive its integration into business processes, pushing companies to adopt and adapt to AI-driven solutions more quickly.

“Gen Z’s use of AI also reflects the broader understanding that AI complements human skills rather than replaces them. As businesses increasingly adopt AI, they will need to recognize the importance of training employees to work alongside AI, ensuring that AI becomes a valuable tool that enhances human creativity and strategic thinking.”

What is AI’s role in business teams and how can companies best leverage it to enhance human skills and knowledge? “AI’s role in teams is to act as a tool that enhances human capabilities rather than [as] a complete replacement for human decision-making. Professionals can use AI to streamline routine tasks, such as data analysis and trend identification, which frees up time for more strategic and creative work. Additionally, AI can accelerate learning and innovation by synthesizing complex data, identifying new perspectives, and providing personalized insights.

“To best leverage AI to enhance human skills and knowledge, companies should:

- Define AI’s role clearly and establish specific tasks for AI, such as data processing or generating insights, and use it as a tool to support human judgment and decision-making.

- Regularly check AI’s outputs for accuracy and reliability to ensure its recommendations align with human expertise.

- Train teams effectively with the knowledge of when to trust AI’s recommendations and, importantly, when to rely on their own judgment and expertise.

- Enable effective collaboration between AI tools and humans. AI should complement human intelligence, helping teams work more efficiently, creatively, and strategically.”

What should companies prioritize to harness AI for long-term success? “Before companies can leverage this powerful technology and the business opportunities that come with it, they must consider the common pitfalls. Companies can build a proprietary system that may be the best fit for their customers or they can leverage third-party partnerships to mitigate the initial cost of building an AI system from the ground up. This is a pivotal decision that impacts future success and longevity. And the answer doesn’t have to be just build or buy; often a hybrid solution can make sense too, depending on the use cases involved.

“Companies should focus on long-term strategy, quality data, clear objectives, and careful integration into existing systems. Start small, scale gradually, and build a dedicated team to implement, manage, and optimize AI solutions. It’s also important to invest in employee training to ensure the workforce is prepared to use AI systems effectively.

“Business leaders also need to understand how their data is organized and scattered across the business. It may take time to reorganize existing data silos and pinpoint the priority datasets. To create or effectively implement well-trained models, businesses need to ensure their data is organized and prioritized correctly.

“It’s crucial to have alignment across teams to create a successful AI program. This includes developers, data analysts and scientists, AI architects and researchers and other critical roles that decide the overall business goals and objectives. These teams must work together closely to ensure there is consistency across development, product, marketing, etc.

“Another critical aspect for companies to consider is the end user. For AI to deliver long-term success, businesses must prioritize understanding the needs and expectations of those who will interact with or benefit from the technology. This involves gathering feedback from end-users throughout the development and implementation process to ensure the solutions being built provide real value.

“By focusing on these priorities, companies can ensure their workforce is prepared and AI programs are highly effective and ethically sound, positioning themselves for long-term success.”

What are some of the biggest advances you see happening with AI this year? “In 2025, generative AI will transition from its experimental phase to mainstream, product-ready applications across industries. Customer service automation, personalized content creation, and knowledge management are expected to lead this evolution.

“As more production-ready solutions are deployed, companies will refine methods to quantify AI’s impact, moving beyond time savings to include metrics like customer satisfaction, revenue growth, enhanced decision-making, and competitive advantage. These advancements will help executives make more informed investment decisions, accelerating generative AI adoption across industries.

“Generative AI systems will also become significantly more proactive, evolving beyond the passive ‘question-and-answer’ model to intelligently anticipate users’ needs. By leveraging a deep understanding of user habits, preferences, and contexts, these systems could predict and provide relevant information, assistance, or actions at the right moment. Acting as intelligent agents, they may even begin autonomously handling simple tasks with minimal input, further enhancing their utility and integration into everyday workflows.”

For what purposes do you see generative AI moving from pilot to production next year? “The leap from pilot projects to full-scale deployment is the next critical step for generative AI in 2025. While 2024 saw companies experiment with AI for efficiency — such as automating customer service queries or creating personalized content — these applications are expected to mature and deliver measurable business outcomes. As companies refine their data pipelines and AI infrastructure, these tools will likely become integral to daily operations rather than isolated experiments.

“Beyond efficiency, there’s a growing interest in leveraging AI for strategic innovation. For example, businesses may use generative AI to prototype new products, model market scenarios, or enhance customer experiences. These strategic applications could reshape industries by fostering innovation, increasing competitive advantage, and driving revenue growth.”

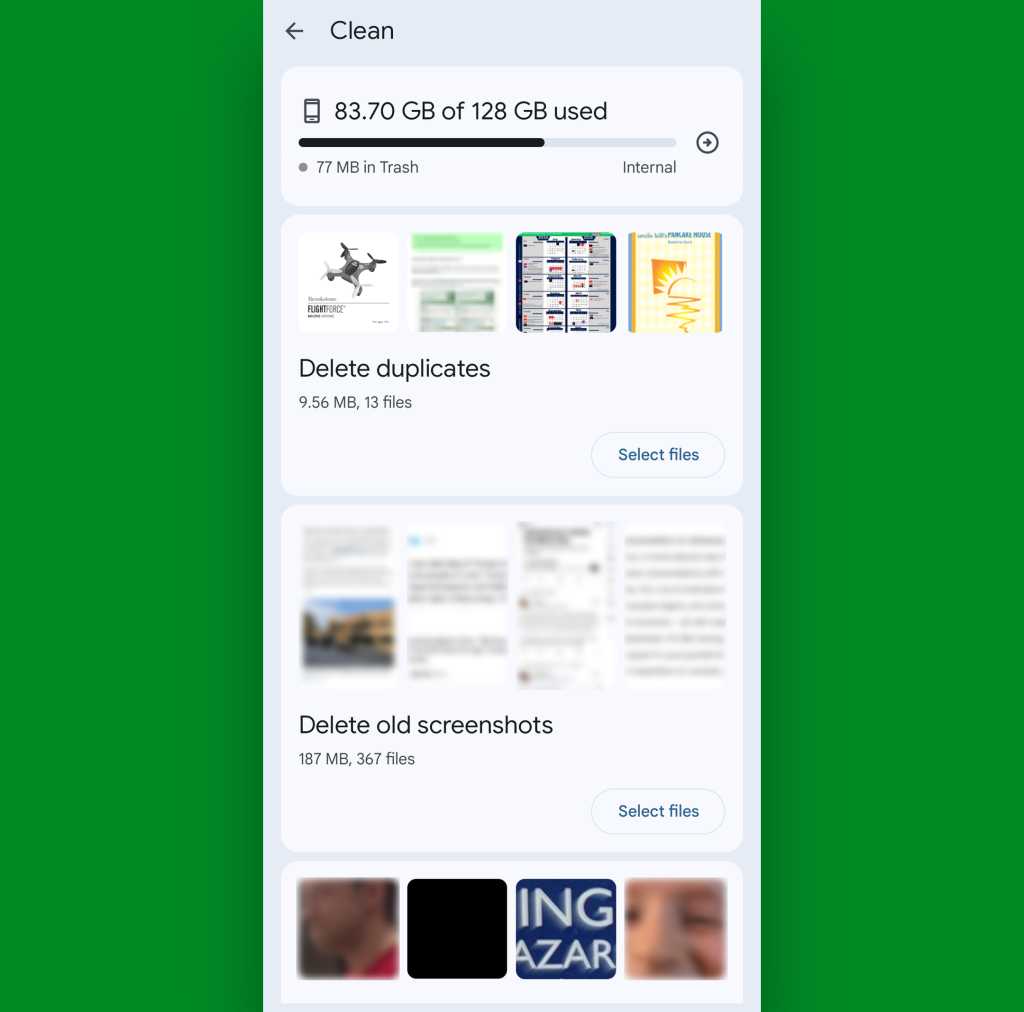

This past year, many organizations seemed to struggle with cleaning their data in order to prepare it for use by AI. Why do you believe that’s still necessary? “Data cleaning remains essential for ensuring AI reliability, even as models become more advanced. Generative AI systems depend on high-quality, consistent data to produce accurate results. Poorly prepared data can lead to biased outputs, reduced performance, and even legal risks in sensitive applications. By standardizing, de-duplicating, and enriching datasets, organizations ensure their AI systems are well-equipped to handle real-world complexity.”
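
As a rough illustration of the standardizing and de-duplicating steps Hoffman mentions, here is a minimal Python sketch. The record fields (`name`, `email`), the sample data, and the helper names are assumptions made for this example, not anything described in the interview, and enrichment is left out entirely.

```python
import re

def standardize(record):
    """Collapse repeated whitespace, trim, and lowercase the fields."""
    name = re.sub(r"\s+", " ", record["name"]).strip().lower()
    email = record["email"].strip().lower()
    return {"name": name, "email": email}

def deduplicate(records):
    """Keep the first occurrence of each (name, email) pair, preserving order."""
    seen = set()
    unique = []
    for record in records:
        key = (record["name"], record["email"])
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique

def clean(records):
    """Standardize first so that near-duplicates collapse to the same key."""
    return deduplicate([standardize(r) for r in records])

# Hypothetical sample data: the first two rows describe the same company.
raw_records = [
    {"name": "  Acme   Corp ", "email": "IR@Acme.example"},
    {"name": "acme corp", "email": "ir@acme.example "},
    {"name": "Globex", "email": "info@globex.example"},
]
cleaned = clean(raw_records)
```

Standardizing before de-duplicating matters here: the two Acme rows only match once casing and whitespace have been normalized.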

How should companies go about ensuring the responses they get from genAI are accurate? “To ensure the accuracy of generative AI, businesses must employ rigorous testing and validation methods. Models should be evaluated against real-world datasets and specific benchmarks to confirm their reliability.

“Many companies are turning to retrieval-augmented generation (RAG), using domain-specific, trusted, and citable data to mitigate the risk of misinformation. This approach is particularly critical for applications like healthcare or financial decision-making, where errors can have serious consequences. Similarly, in such high-stakes functions, human oversight is essential.”
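
To make the RAG idea concrete, the sketch below shows only the retrieval and prompt-building half of such a pipeline, in Python. It assumes a tiny in-memory set of trusted documents and uses naive keyword overlap for ranking; production systems typically use vector search instead. The document ids, texts, and function names are hypothetical, and no model is actually called.

```python
import re

# Hypothetical trusted, citable sources.
DOCUMENTS = [
    {"id": "doc-1", "text": "Quarterly revenue grew eight percent on strong subscription sales."},
    {"id": "doc-2", "text": "The company opened a new office and hired a head of design."},
    {"id": "doc-3", "text": "Subscription revenue is expected to keep growing next quarter."},
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=2):
    """Rank documents by how many words they share with the question."""
    query_words = tokenize(question)
    scored = [(len(query_words & tokenize(d["text"])), d) for d in documents]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]

def build_prompt(question, passages):
    """Ground the model in the retrieved passages and ask it to cite their ids."""
    context = "\n".join(f"[{p['id']}] {p['text']}" for p in passages)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}"
    )

question = "How is subscription revenue growing?"
passages = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, passages)
```

The resulting `prompt` would then be sent to whichever model the business uses. Because every passage carries an id, a human reviewer can trace each cited claim back to its source, which supports the oversight Hoffman describes.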

Companies that have deployed AI have used multiple models, but how do you create pipelines between those models and businesses for strategic purposes? “Rather than relying on a single provider, companies are adopting a multi-model approach, often deploying three or more AI models, routing to different models based on the use case. Continuous monitoring is necessary to ensure the models perform optimally, maintain accuracy, and adapt to changing business needs.”
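
One simple way to picture use-case routing with monitoring is a lookup table plus a running accuracy tally, sketched below in Python. The model names, use-case labels, and the `Monitor` class are all hypothetical placeholders for this example; the interview does not describe how any particular company implements routing.

```python
# Hypothetical mapping from use case to model name.
ROUTES = {
    "summarization": "small-domain-model",
    "classification": "small-domain-model",
    "research": "large-general-model",
}
DEFAULT_MODEL = "large-general-model"

def route(use_case):
    """Pick a model for the use case, falling back to the general model."""
    return ROUTES.get(use_case, DEFAULT_MODEL)

class Monitor:
    """Track per-model accuracy from reviewed outputs."""

    def __init__(self):
        self.stats = {}  # model name -> (total reviewed, total correct)

    def record(self, model, correct):
        total, right = self.stats.get(model, (0, 0))
        self.stats[model] = (total + 1, right + int(correct))

    def accuracy(self, model):
        """Return the fraction correct, or None if nothing was reviewed yet."""
        total, right = self.stats.get(model, (0, 0))
        return right / total if total else None

monitor = Monitor()
chosen = route("summarization")
monitor.record(chosen, correct=True)
monitor.record(chosen, correct=False)
```

In practice the accuracy figures would feed alerts or trigger a change to the routing table when a model's performance drifts.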

Do you see smaller language models or the more typical large language models dominating in 2025 and why? “In 2025, the choice between smaller language models [SLMs] and large language models [LLMs] will ultimately depend on specific use cases. SLMs are invaluable for specified, narrow tasks that have use-case-specific constraints around security, cost, and latency. SLMs can be faster and cheaper to operate and can be deeply customized for domain workflows. For example, AlphaSense uses SLMs for earnings call summarization. Another advantage of SLMs is that they can be run on-device, which is critical for many mobile applications leveraging sensitive, personal data.

“LLMs, on the other hand, will dominate in general-purpose and complex applications requiring high-level reasoning, adaptability, and creativity. Their expansive knowledge and versatility make them essential for advanced research, multimodal content generation, and other sophisticated use cases. A hybrid approach will likely define the AI landscape in 2025, combining the efficiency of SLMs with the versatility of LLMs, enabling businesses to optimize performance, cost, and scalability.”