The release of the latest version of the Chinese genAI bot DeepSeek last month upended the tech world when its creators claimed it was built for only $6 million — far less than the hundreds of billions of dollars Microsoft, Google, OpenAI, Meta, and others have poured into genAI development.

The shockwaves were immediate. GenAI-related stocks took a nosedive, losing hundreds of billions of dollars in value overnight. Many prognosticators said DeepSeek would undermine America’s genAI dominance — and threaten the country’s big AI companies, notably Microsoft.

Microsoft, which became a $3-trillion company based on its AI leadership, has perhaps the most to lose from DeepSeek’s arrival. It has already invested billions of dollars in AI and has said it will invest another $80 billion this year alone. Given that DeepSeek said it built its newest chatbot so cheaply, is Microsoft throwing billions of dollars away? Can it compete with a company that can build genAI at such a low cost?

Microsoft has nothing to fear from DeepSeek. Here are three reasons the Chinese upstart won’t hurt Microsoft — and might even help it.

DeepSeek’s savings aren’t as large as it claims

DeepSeek’s claim that it developed the latest version of its chatbot for $6 million was eye-popping, given the amount of money being poured into AI development and related infrastructure by so many other companies. It was even more eye-popping because the chatbot appears to be technically on par with OpenAI’s ChatGPT, which underlies Microsoft’s Copilot.

But DeepSeek’s claim was extremely misleading. The semiconductor research and consulting firm SemiAnalysis took a deep dive into the true costs of developing DeepSeek, based on information publicly provided by the Chinese company. SemiAnalysis found that the $6 million was “just the GPU cost of the pre-training run, which is only a portion of the total cost of the model. Excluded are important pieces of the puzzle like R&D and TCO of the hardware itself.”

Hardware costs, SemiAnalysis found, were likely well over half a billion dollars. The firm estimates that total capital expenditure on the hardware, including the cost of operating it, was approximately $1.6 billion.

Beyond that, OpenAI claims that DeepSeek may have illegally used data created by OpenAI to train its model. Obtaining training data can cost billions of dollars, so it’s unclear how much DeepSeek would have had to spend had it not used OpenAI’s data.

Although DeepSeek likely still spent much less than OpenAI, Microsoft, and other competitors on building its model, its costs probably run into the billions of dollars, not a mere $6 million. And it’s not at all clear that DeepSeek can generate enough revenue to sustain its burn rate.

Businesses fear privacy and security breaches — and Chinese censorship

Cost savings are good. But even more important to most enterprises is the privacy and security of their data and business, and the privacy and security of their customers’ data.

Congress passed a law banning TikTok from the US based on fears that data is being gathered about users of the app and sent back to China. (US President Donald J. Trump has put a temporary hold on that ban.) But the kind of data that TikTok might gather and report back to China pales in comparison with the kinds of data DeepSeek might send. TikTok merely lets people post and watch videos. DeepSeek’s genAI chatbot has access to the sensitive personal, business, and financial data of enterprises and individuals that use it.

DeepSeek’s privacy policy admits upfront that it sends business and personal data to China, noting, “We store the information we collect in secure servers located in the People’s Republic of China.” The policy adds, “We may collect your text or audio input, prompt, uploaded files, feedback, chat history, or other content that you provide to our model and Services.”

Beyond that, Wired magazine adds: “DeepSeek says it will collect information about what device you are using, your operating system, IP address, and information such as crash reports. It can also record your ‘keystroke patterns or rhythms.’”

What might DeepSeek do with that data? Chinese law requires companies to turn over any information the Chinese government requests. American businesses are unlikely to want to expose their data to the Chinese government in that way.

In addition, DeepSeek heavily censors its answers to requests, refusing to answer some questions, and providing Chinese propaganda for others, according to The New York Times. Businesses certainly don’t want to become arms of the Chinese government’s propaganda efforts.

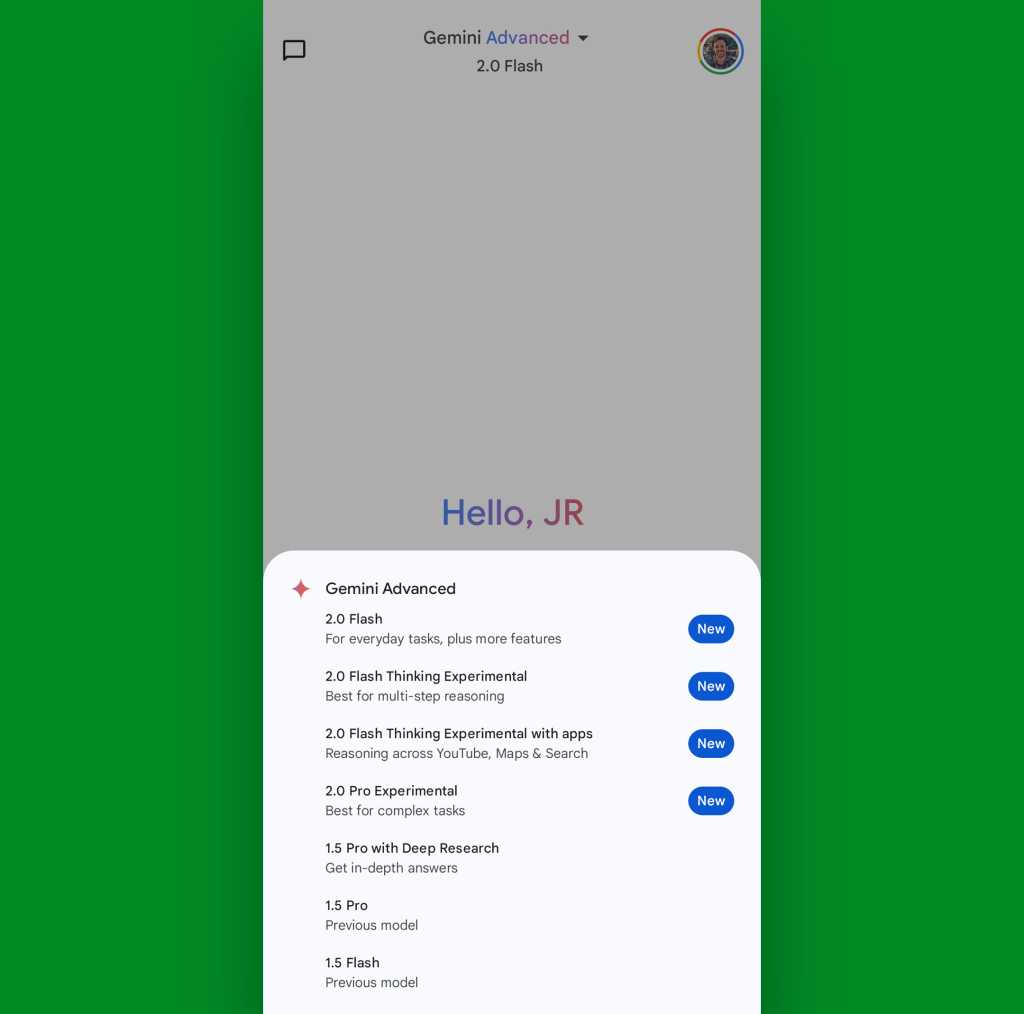

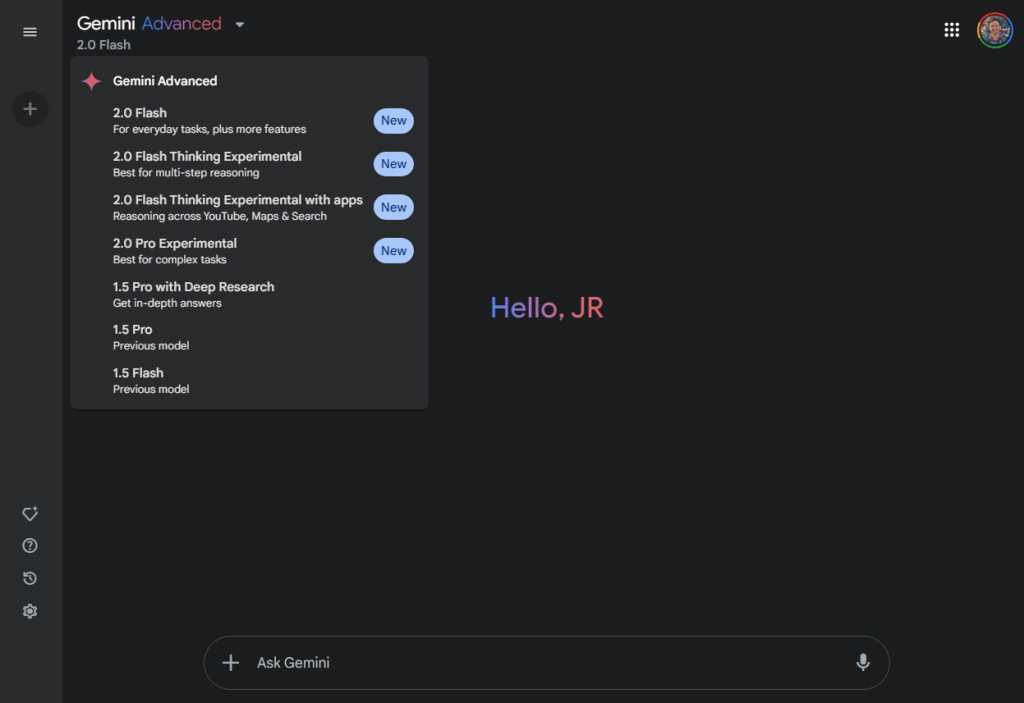

Enterprises want off-the-shelf AI integration with business tools

What businesses want from genAI tools, above all, is to increase their productivity. Doing that requires integration with their applications, tools, and infrastructure. That’s exactly what Microsoft does with its entire Copilot product line, including Microsoft 365, OneDrive, SharePoint, Teams, GitHub, Microsoft’s CRM and ERP platform Dynamics 365, and others.

DeepSeek offers nothing like that kind of integration. And without that, DeepSeek isn’t likely to make much progress against Microsoft — even if it can sell its chatbot more cheaply.

Microsoft itself doesn’t seem to be concerned, at least publicly. Microsoft CEO Satya Nadella even believes that the efficiencies DeepSeek has found in building AI will ultimately help his company’s bottom line.

“That type of optimization means AI will be much more ubiquitous,” he told Yahoo Finance. “And so, therefore, for a hyperscaler like us, a PC platform provider like us, this is all good news as far as I’m concerned.”